In this piece, I will walk you through my thought process during an investigation aimed at identifying and collecting sources of intelligence. Specifically, we will focus on open-source intelligence (OSINT). Our primary goal is to monitor intelligence from two kinds of sources: ‘markets’ and ‘some shady activities.’ While I will present a case I worked on some time ago, I won’t share details of the case itself. Instead, I will discuss the tools I used, how to conduct a hunt and collect intelligence, and the analysis techniques involved. As you join me on this journey, I hope you don’t get lost in my thought process.

We will focus on OSINT, dark web analysis for threat intelligence, scouring forums and marketplaces, how to infiltrate these forums, mining threat intelligence, and using search engines and blockchain analytics.

Anatomy of the Dark Web

Alright, what is the dark web?

If you are here, you likely know what the dark web is. Still, let’s briefly go over it. The Internet is easier to understand if you picture it as three layers: the surface web, the deep web, and finally, the dark web.

The surface web is the part we browse daily; reading this article is an example. It is indexed by search engines, which means that generally anyone can find it.

Then, there is the deep web. This area is colossal compared with the surface web and contains everything those search engines cannot index. Reaching this content typically requires a direct link. A common mix-up is confusing the deep web with the dark web. They are not the same: the dark web is a tiny portion of the deep web that is deliberately kept under wraps.

Everything from illegal marketplaces to hacking forums and hidden services has given the dark web its reputation. Anonymity is its key characteristic, and it attracts those who seek privacy.

How Does Tor Work?

Tor (short for “The Onion Router”) is the network that powers the best-known part of the dark web. Onion routing works by wrapping your internet traffic in layers of encryption, much like an onion has several layers of skin. Your data hops between multiple relays on its way to its destination, and each relay peels off one layer, so anyone snooping on a single link sees only encrypted traffic.

Tor routes traffic through a network of volunteer-operated relays located around the world. At each hop, a relay can tell only where the data came from and where it is heading next, never the full path. A typical circuit chains three relays (guard, middle, and exit), so your identity stays hidden and your activity stays private.

The exit relay is the last stop your data makes before it reaches a regular Internet destination. It removes the final layer of Tor encryption and forwards the traffic, so if the connection itself is not encrypted (plain HTTP rather than HTTPS, for example), the exit can read it. Decryption is not confined to the exit, though: every relay removes its own layer, but only the guard sees where the traffic came from and only the exit sees where it is going, so no single relay sees both the source and the destination. Connections to .onion services never leave the Tor network and use no exit relay at all. This web of redirection and layered encryption is what makes a user’s origin and activity so difficult to trace.
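To make the layering concrete, here is a toy model in Python. It is a sketch under loud assumptions, not how Tor is implemented: a SHA-256 keystream stands in for real ciphers (Tor uses AES in counter mode with per-hop keys negotiated during circuit setup), layers are JSON rather than fixed-size cells, and the relay names and keys are made up. What it does show is the property described above: each relay strips exactly one layer and learns only the next hop.

```python
import hashlib
import json
from typing import List, Tuple


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream built from SHA-256(key || counter) blocks. NOT real cryptography."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_layer(key: bytes, data: bytes) -> bytes:
    """XOR with the keystream; applying it twice with the same key undoes it."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def build_onion(circuit: List[Tuple[str, bytes]], destination: str, payload: str) -> bytes:
    """Client side: wrap the payload once per relay, innermost layer for the exit."""
    # Only the exit's layer names the real destination and carries the payload.
    inner = json.dumps({"next": destination, "data": payload}).encode()
    blob = xor_layer(circuit[-1][1], inner)
    # Walk backwards from the middle relay to the guard; each layer names only the next relay.
    for i in range(len(circuit) - 2, -1, -1):
        key = circuit[i][1]
        next_hop = circuit[i + 1][0]
        layer = json.dumps({"next": next_hop, "data": blob.hex()}).encode()
        blob = xor_layer(key, layer)
    return blob


def peel(key: bytes, blob: bytes) -> Tuple[str, str]:
    """Relay side: remove this relay's layer and read where to forward next."""
    layer = json.loads(xor_layer(key, blob))
    return layer["next"], layer["data"]


if __name__ == "__main__":
    circuit = [("guard", b"k-guard"), ("middle", b"k-middle"), ("exit", b"k-exit")]
    onion = build_onion(circuit, "example.com:80", "GET /")
    hop, data = peel(b"k-guard", onion)                 # guard learns only "middle"
    hop, data = peel(b"k-middle", bytes.fromhex(data))  # middle learns only "exit"
    hop, data = peel(b"k-exit", bytes.fromhex(data))    # exit learns destination and payload
    print(hop, data)  # example.com:80 GET /
```

Only the exit’s layer contains the destination, and only the guard talks to the client directly, which is why no single relay can connect the two.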

Case Study

Alright, the case was simple. The profile we were hunting and their operation had already been investigated by other researchers; our task was the process of collecting, analyzing, and disseminating actionable information on their tactics, techniques, and procedures (TTPs). When I received the case, some internal research was already available, so it was easy to focus solely on the profiles and the fingerprints of their activity across the web, and to uncover any hidden connections within the operation.

As with any assignment, you start with a name or domain of interest and some information to get you going. My first task was to identify the relationship between two onion sites and demonstrate that they are owned by the same entity or individual. I was also tasked with tracing their blockchain fingerprints to a registered cryptocurrency exchange.

Simple, right? However, as the hunt progresses, you uncover a lot more information and details that others may have ignored or missed, and you have to decide what to do with them.

Keep this in mind as we go. As the table below shows, we have two targets: one is a marketplace, the other is more like a personal service. Earlier analysis of the data collected on both sites concluded that the most likely explanation is that the same individual or entity runs them, since they share many characteristics and small details. However, this is still just an assumption. We need facts and proof that they are indeed run by the same actor, and that’s what we’re going to find.

There are several methodologies for analyzing cyber threat intelligence (CTI) once it has been collected. Two of them matter here:

- Analysis of Competing Hypotheses (ACH): evaluating multiple competing hypotheses to determine the most likely explanation for a given set of data. This has already been done.

- Key Assumptions Check: identifying and challenging the assumptions underlying an intelligence assessment, ensuring that they are well-founded rather than resting on beliefs or biases.
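The hypothesis evaluation in the first item is usually called Analysis of Competing Hypotheses (ACH), and its scoring step is easy to keep honest with a small matrix. A minimal sketch follows. The hypotheses and evidence rows are hypothetical placeholders, not findings from this case, and the scoring is simplified: real ACH also weighs the credibility and relevance of each item. The idea it encodes is that hypotheses are ranked by how much evidence contradicts them, and that evidence consistent with every hypothesis tells you nothing.

```python
from typing import Dict, List, Tuple

# Ratings per evidence item: "C" = consistent, "I" = inconsistent, "N" = neutral.
Matrix = Dict[str, Dict[str, str]]


def rank_hypotheses(matrix: Matrix, hypotheses: List[str]) -> List[Tuple[str, int]]:
    """Rank hypotheses by the number of inconsistent evidence items (fewest first)."""
    scores = {h: 0 for h in hypotheses}
    for ratings in matrix.values():
        for h in hypotheses:
            if ratings.get(h) == "I":
                scores[h] += 1
    return sorted(scores.items(), key=lambda item: item[1])


def non_diagnostic(matrix: Matrix, hypotheses: List[str]) -> List[str]:
    """Evidence rated identically for every hypothesis cannot discriminate between them."""
    return [e for e, r in matrix.items() if len({r.get(h, "N") for h in hypotheses}) == 1]


if __name__ == "__main__":
    # Hypothetical hypotheses:
    # H1 = one operator runs both sites
    # H2 = different operators sharing a host or template
    # H3 = unrelated sites
    hypotheses = ["H1", "H2", "H3"]
    evidence = {
        "Same web server and page template": {"H1": "C", "H2": "C", "H3": "I"},
        "Payment addresses fall in one wallet cluster": {"H1": "C", "H2": "I", "H3": "I"},
        "Both sites accept Bitcoin": {"H1": "C", "H2": "C", "H3": "C"},
    }
    print(rank_hypotheses(evidence, hypotheses))
    print(non_diagnostic(evidence, hypotheses))
```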

+---------+------------------------------------------------------+
| Site    | Description                                          |
+---------+------------------------------------------------------+
| Site A  | Operates as a market facilitating the sale of        |
|         | hacking tools and personally identifiable            |
|         | information (PII).                                   |
+---------+------------------------------------------------------+
| Site B  | Appears to be a platform offering hacking services.  |
+---------+------------------------------------------------------+

Alright, time to dig in. Some researchers try to gather as much information as possible so they can analyze and organize the data later. I, however, like to look at this from an offensive standpoint: “Where did they mess up?” A single detail or vulnerability can be enough to de-anonymize the operation or launch an offensive attack on the target. This is not the typical mindset of a threat researcher, but it has always worked for me. So, the first step is information gathering. As I said, some research has already been done, but I like to go over the information and conduct my own research.

To conduct an investigation, you need a profile, most likely a cybercriminal one. You don’t have to do anything illegal; you just need to appear as one. For that, you need credibility, such as existing forum members who can vouch for you, or you can pay for entry. On forums, building connections and using social engineering to infiltrate them and establish a reputation can be your foothold. Alternatively, all this can be bypassed by targeting accounts that already have reputation and credibility and using them as puppets, with no need for a profile of your own. There are many accounts out there, especially on these “dark web” forums, that can be easily taken over, since we’re not dealing with a multi-million-dollar company that has its own red team. Depending on who you ask, though, this may fall under “Legality and Ethics”: hacking laws differ across jurisdictions.

More important still: do not take operational security (OPSEC) lightly in a hunt or investigation. Always practice good OPSEC and follow the process. Be aware of what you’re doing, how you interact with and move through these sites, and what you click on. Simply put, it boils down to one thing: control over information and actions, so that they cannot be turned against you. CALI (Capabilities, Activities, Limitations, and Intentions) is a simple checklist outlining the operation’s must-haves.

But how does this relate to the site itself? Since you’re not investigating a forum, how does this come into play? It comes into play once you identify persons of interest who run or claim to run the site, since many of them have accounts across different Tor-based forums. But let’s not jump the gun. Let’s start with some simple information gathering on Site A.

Info Gathering & Research

So, Site A: your typical Tor-based marketplace. The site was relatively new and not very popular, but it had been running quietly for some time. The listings were the usual suspects: wildlife sales, phishing toolkits, stolen identification, and so on.

The first step is to crawl the sites and collect data from them. This involves searching the collected pages for specific keywords and extracting metadata to discover new links and content, possible email addresses, and Bitcoin addresses. Performing service fingerprinting on discovered services is also crucial, but for now, let’s focus on data collection.

Lucky for us, there are crawlers designed to collect data from sites on the Tor network. For example, TorBot is a tool that can crawl any URL hosted on the Tor network and save website data to an output JSON file. Another tool called TorCrawl can also crawl any URL on the Tor network. In addition to saving site data to an output HTML file, it can search for specific keywords and return metadata about the crawl itself.
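Crawlers like these handle the fetching; the extraction step can be sketched separately. The Python below assumes the page text has already been saved by a crawler and pulls out v3 onion addresses, email addresses, and BTC/ETH addresses with regular expressions. The patterns are my own approximations, not the built-in rules of TorBot, TorCrawl, or any other tool. Legacy and P2SH Bitcoin candidates are filtered with a Base58Check checksum to cut false positives, while bech32 candidates (addresses starting with bc1) are kept without checksum verification.

```python
import hashlib
import re
from typing import Dict, List

B58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"

# Approximate patterns; tune them against your own data.
PATTERNS = {
    "onion": re.compile(r"\b[a-z2-7]{56}\.onion\b"),  # v3 onion addresses only
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "btc": re.compile(r"\b(?:[13][1-9A-HJ-NP-Za-km-z]{25,34}|bc1[ac-hj-np-z02-9]{11,71})\b"),
    "eth": re.compile(r"\b0x[a-fA-F0-9]{40}\b"),
}


def b58decode(s: str) -> bytes:
    """Decode Base58; each leading '1' stands for one leading zero byte."""
    n = 0
    for ch in s:
        n = n * 58 + B58_ALPHABET.index(ch)  # raises ValueError on invalid characters
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    leading_zeros = len(s) - len(s.lstrip("1"))
    return b"\x00" * leading_zeros + body


def valid_base58check(address: str) -> bool:
    """Legacy/P2SH addresses: 1 version byte + 20-byte hash + 4-byte double-SHA256 checksum."""
    try:
        raw = b58decode(address)
    except ValueError:
        return False
    if len(raw) != 25:
        return False
    payload, checksum = raw[:-4], raw[-4:]
    return hashlib.sha256(hashlib.sha256(payload).digest()).digest()[:4] == checksum


def extract_indicators(text: str) -> Dict[str, List[str]]:
    """Return sorted, de-duplicated indicators found in a page's text."""
    found = {kind: sorted(set(p.findall(text))) for kind, p in PATTERNS.items()}
    # Drop Base58 candidates with a bad checksum; bech32 is left unverified here.
    found["btc"] = [a for a in found["btc"] if a.startswith("bc1") or valid_base58check(a)]
    return found
```

Running every saved page through a function like this and keeping the results per site gives you the raw material for the cross-referencing that comes later.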

There are other tools available as well. I usually leverage Selenium for its flexibility in handling the unique authentication requirements of various marketplaces and in gathering the exact data needed for our analysis of illicit digital goods. For example, I collect and crawl domains listed by search engines like Ahmia or shared in CTI researcher communities.

The list goes on; it can grow to thousands of sites of different types, and you run checks to see which are still active and which are not. This way, you end up with a kind of phone book that gets you where you need to go. There’s so much you can pull, but let’s get back to our subject, Site A.
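The “phone book” can be as simple as an index that remembers where each link came from and the result of every reachability check. In the sketch below, the PhoneBook class and its methods are hypothetical names, and the network check is injected as a probe callable so the bookkeeping can be tested offline. In practice, the probe might issue an HTTP request through Tor’s SOCKS proxy (the tor daemon listens on 127.0.0.1:9050 by default), which is an assumption about your setup.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class OnionEntry:
    url: str
    source: str  # where the link was found, e.g. a search engine or a CTI feed
    checks: List[Tuple[datetime, bool]] = field(default_factory=list)

    def last_seen_up(self) -> Optional[datetime]:
        """Most recent check that found the site reachable, if any."""
        ups = [ts for ts, up in self.checks if up]
        return max(ups) if ups else None


class PhoneBook:
    """Hypothetical link index; the probe callable performs the actual reachability check."""

    def __init__(self) -> None:
        self.entries: Dict[str, OnionEntry] = {}

    def add(self, url: str, source: str) -> None:
        # Keep the first source that reported a URL; later duplicates are ignored.
        self.entries.setdefault(url, OnionEntry(url, source))

    def check_all(self, probe: Callable[[str], bool], now: Optional[datetime] = None) -> None:
        now = now or datetime.now(timezone.utc)
        for entry in self.entries.values():
            try:
                up = probe(entry.url)
            except Exception:
                up = False  # timeouts and circuit failures are recorded as "down"
            entry.checks.append((now, up))

    def alive(self) -> List[str]:
        """URLs whose latest check succeeded."""
        return sorted(e.url for e in self.entries.values() if e.checks and e.checks[-1][1])
```

Keeping the full check history, rather than a single up/down flag, also tells you when a site went dark, which matters because onion sites come and go.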

Once I’ve extracted the links and endpoints present at the time, I usually shift my focus to URLs with parameters, like foo.php?id=2. I run a simple fuzz for files (PDF, PNG, XML, etc.) and intel (emails, BTC and ETH addresses, etc.). I can’t tell you how often these sites run outdated packages or software, so you usually look for SQL injection, directory traversal, and the like; more on this later. Once the data is gathered and you have an idea of the overall site, you move to Site B and do exactly the same. With data from both, you start cross-referencing intel and building a report. Usually, this is when I start noticing the resemblance between the two sites: the same technology, but also some small details. All of this data must be captured and reported as you go; it’s like writing a penetration testing report. Document what’s going on as you move, because .onion sites often go down for prolonged periods or disappear entirely.
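Cross-referencing can start as plain set comparison. The sketch below assumes each site’s findings have been reduced to categories of fingerprint values (server banner, front-end libraries, addresses, and so on); the category names and sample values are hypothetical. For each category, it reports the shared values and the Jaccard similarity (shared values divided by all distinct values). A common Apache version is weak evidence on its own; rare overlaps, such as the same odd asset path or the same payment address, carry far more weight.

```python
from typing import Dict, Set


def compare_sites(a: Dict[str, Set[str]], b: Dict[str, Set[str]]) -> Dict[str, dict]:
    """Per fingerprint category: values both sites share and their Jaccard similarity."""
    report = {}
    for category in sorted(set(a) | set(b)):
        left, right = a.get(category, set()), b.get(category, set())
        union = left | right
        shared = left & right
        report[category] = {
            "shared": sorted(shared),
            "jaccard": len(shared) / len(union) if union else 0.0,
        }
    return report


if __name__ == "__main__":
    site_a = {"server": {"Apache/2.4.41"}, "js": {"jquery-3.4.1", "bootstrap-4.3.1"}}
    site_b = {"server": {"Apache/2.4.41"}, "js": {"jquery-3.4.1"}}
    for category, result in compare_sites(site_a, site_b).items():
        print(category, result)
```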

Remember, we’re trying to find a link between two different onion sites. With the information gathering phase behind us, we start by identifying the administrators and popular vendors of Site A. We begin by creating an intelligence profile that records the following:

- Username / Alias

- Date of account creation and online/offline times (map out an activity pattern)

- PGP public key (Important! Reused keys indicate related accounts)

- Type of merchandise offered

- Methods of contact
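An intelligence profile maps naturally onto a small record type. The sketch below is one hypothetical layout for the fields listed above, plus two helpers: one groups profiles that publish the same PGP fingerprint, and one builds an hour-of-day histogram for the activity pattern. It assumes fingerprints were already extracted from the posted keys (for example with GnuPG) and that timestamps are normalized to UTC; the fingerprints in the test are shortened placeholders, not real 40-character fingerprints. A reposted public key alone proves little, since anyone can copy one; a message signed with the matching private key is much stronger evidence.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List


@dataclass
class ActorProfile:
    username: str
    site: str
    pgp_fingerprint: str = ""  # assumed already extracted from the posted key
    merchandise: List[str] = field(default_factory=list)
    contacts: List[str] = field(default_factory=list)
    seen_at: List[datetime] = field(default_factory=list)  # activity timestamps, UTC


def normalize_fpr(fpr: str) -> str:
    """Fingerprints are often printed in spaced groups and mixed case."""
    return fpr.replace(" ", "").upper()


def linked_by_pgp(profiles: List[ActorProfile]) -> Dict[str, List[str]]:
    """Group profiles publishing the same key; only groups of two or more are returned."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for p in profiles:
        if p.pgp_fingerprint:
            groups[normalize_fpr(p.pgp_fingerprint)].append(f"{p.username}@{p.site}")
    return {fpr: sorted(members) for fpr, members in groups.items() if len(members) > 1}


def activity_by_hour(profile: ActorProfile) -> Dict[int, int]:
    """Count activity per UTC hour; a long quiet block often hints at the actor's night."""
    return dict(sorted(Counter(ts.hour for ts in profile.seen_at).items()))
```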

Now, it’s time to translate the intel we collected into actors, events, and attributes. First things first, remember that thing about the offensive approach? Well, in the data, I noticed a couple of things.

Site B runs on the Apache web server, which is fairly standard. What gets interesting is that the operator seems to have forgotten to disable Apache’s status module, mod_status, whose page is usually served at /server-status. This module reports on the requests Apache is currently serving and has recently served, including:

- The time the server was last started or restarted, and how long it has been running

- Details about the current hosts and the requests being processed
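The restart time is useful beyond curiosity: if two onion sites both expose this page and report the same start time, they are very likely served by the same Apache instance. The sketch below parses the machine-readable variant of the page (server-status?auto), which prints “Key: value” lines, and estimates the start time by subtracting the reported uptime from the moment the page was fetched. The field names (Uptime, ServerUptimeSeconds) follow Apache 2.4’s output as I understand it and should be checked against the actual page; the sample captures are hypothetical. A small tolerance absorbs the delay between fetches.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict


def parse_status_auto(text: str) -> Dict[str, str]:
    """Parse the 'Key: value' lines of Apache's server-status?auto output."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # lines without a colon (such as a bare hostname) are skipped
            fields[key.strip()] = value.strip()
    return fields


def estimated_start(fields: Dict[str, str], observed_at: datetime) -> datetime:
    """Estimate the last (re)start as fetch time minus the reported uptime in seconds."""
    uptime = fields.get("ServerUptimeSeconds") or fields.get("Uptime")
    if uptime is None:
        raise KeyError("no uptime field in server-status output")
    return observed_at - timedelta(seconds=int(uptime))


def same_restart(a: datetime, b: datetime, tolerance_s: float = 5.0) -> bool:
    """True if two estimated start times agree within the tolerance."""
    return abs((a - b).total_seconds()) <= tolerance_s


if __name__ == "__main__":
    # Hypothetical captures from two sites, fetched one minute apart.
    site_a = parse_status_auto("Uptime: 86400\nTotal Accesses: 120\n")
    site_b = parse_status_auto("Uptime: 86460\nTotal Accesses: 75\n")
    t_a = datetime(2024, 1, 2, 12, 0, 0, tzinfo=timezone.utc)
    t_b = datetime(2024, 1, 2, 12, 1, 0, tzinfo=timezone.utc)
    print(same_restart(estimated_start(site_a, t_a), estimated_start(site_b, t_b)))  # True
```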

There’s more juicy intel in there, but we keep moving according to the playbook. Two profiles of interest showed up in the data on Site A: one labeled Admin and the other a vendor. Both were involved in multiple activities, such as leaking sensitive data. I decided to focus on gathering information about these profiles.

My goal is to determine whether I can link them to any profiles or accounts on other sites or forums. Starting with Site A’s administrator, it is time to cross-reference that intelligence profile and search for any useful information. I was able to trace the username across various forums, one of which is xss[.]is. In case you’re unfamiliar, “xss is a Russian forum that hosts discussions on vulnerabilities, exploitation, malware, and various other cyber-related topics.” Great forum.

To scope the profile thoroughly, I ran a quick crawl of it and retrieved a link. The link returned no response, indicating that the site is no longer operational. The profile itself has been inactive since its last recorded activity in 2021, which means the link is dead and the profile was created before all this mess. So I ran the site through the Wayback Machine to see whether any snapshots were available.

The archive holds snapshots of the site dating back to approximately 2018, with additional snapshots from 2019, 2020, and 2021.
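Checking snapshots by hand works, but the Wayback Machine also exposes a CDX API that lists captures programmatically, which is handy for spotting subdomains and paths across years. The sketch below builds a query with matchType=domain (include subdomains), output=json, and collapse=urlkey (one row per distinct URL), then groups the results by the year of their first capture. The parameters come from the CDX server’s public documentation; the domain is whatever the profile’s link pointed to, and example.com in the test is a placeholder.

```python
import json
from collections import defaultdict
from typing import Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain: str) -> str:
    """Build a CDX query listing captured URLs for a domain and its subdomains."""
    params = {
        "url": domain,
        "matchType": "domain",  # include subdomains
        "output": "json",       # rows as JSON arrays; the first row is the header
        "fl": "timestamp,original",
        "collapse": "urlkey",   # keep one row (the earliest capture) per distinct URL
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def parse_cdx(raw: str) -> Dict[str, List[str]]:
    """Group captured URLs by the year of their first capture."""
    rows = json.loads(raw) if raw.strip() else []
    by_year: Dict[str, List[str]] = defaultdict(list)
    for timestamp, original in rows[1:]:  # skip the header row
        by_year[timestamp[:4]].append(original)
    return dict(sorted(by_year.items()))


def fetch_snapshots(domain: str) -> Dict[str, List[str]]:
    """Network call; the archive can be slow, so allow a generous timeout."""
    with urlopen(cdx_query_url(domain), timeout=60) as resp:
        return parse_cdx(resp.read().decode("utf-8"))
```

Any row can then be opened in a browser as https://web.archive.org/web/TIMESTAMP/ORIGINAL to view that capture.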

Going through the snapshots, we discover subdomains labeled ‘Sub’ and ‘Services.’ Interestingly, the site was offering penetration testing services before it went offline. Even more intriguing, before it became a service, it started as a personal blog in the 2018 snapshot, featuring articles on hacking, tools, and related topics.

After carefully examining all of this, we collected the following information:

+--------------------------------------------+------------------+
| Site A                                     | Site B           |
+--------------------------------------------+------------------+
| Bitcoin address                            | Bitcoin address  |
| Name linked to the administrator’s alias   | Email address    |
| Country of residence                       |                  |
| Email address                              |                  |
+--------------------------------------------+------------------+

Now we have collected and analyzed some valuable information on Site A’s administrator, and we know the admin has an interest in hacking services, but we still need a key piece of evidence linking Site A to Site B. I researched the email address to see where it was connected and which services it had been used with, which led me to a set of digital footprints belonging to one person across the web, from social networks like LinkedIn to a YouTube channel and so on. With a possible identification of Site A’s owner, it’s time to examine the evidence provided by the Bitcoin addresses.

Blockchain Forensics On Transactions

From a single Bitcoin address, various insights can be derived, including the total number of transactions, the sources and amounts of incoming funds, the destinations and amounts of outgoing funds, a historical timeline of transactions, and other Bitcoin addresses associated with the same wallet. This is where WalletExplorer and OTX come into play.

Usually, the first approach is to look for patterns and correlations that link multiple addresses. We also map the flow of funds and the relationships between addresses to uncover suspicious activity or money laundering schemes, and extract and analyze additional transaction data, such as timestamps, to gain further insights.
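Mapping the flow of funds is, at its core, a graph search, and it fits the task from the case: tracing funds toward a registered exchange. The sketch below is a simplification under stated assumptions: the transaction graph has already been exported from an explorer into an address-to-recipients mapping, some addresses already carry entity labels (for example from WalletExplorer), and amounts and change outputs are ignored. The function name and sample addresses are hypothetical. Breadth-first search returns the path with the fewest hops, and max_hops keeps the search from wandering through mixers indefinitely.

```python
from collections import deque
from typing import Dict, List, Optional, Set


def trace_to_labeled(
    outputs: Dict[str, List[str]],  # address -> addresses it has sent funds to
    labels: Dict[str, str],         # address -> known entity, e.g. "ExchangeX"
    start: str,
    max_hops: int = 5,
) -> Optional[List[str]]:
    """Breadth-first search for the shortest payment path from start to a labeled address."""
    queue = deque([[start]])
    seen: Set[str] = {start}
    while queue:
        path = queue.popleft()
        current = path[-1]
        if current in labels and current != start:
            return path
        if len(path) - 1 >= max_hops:
            continue  # hop budget exhausted on this branch
        for nxt in outputs.get(current, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None
```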

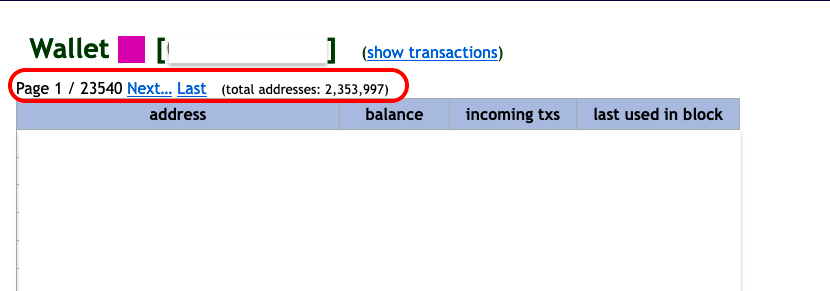

With these tools, we are able to identify any other bitcoin addresses owned by the same wallet.
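Explorers such as WalletExplorer group addresses into wallets largely on the common-input-ownership heuristic: addresses spent together as inputs of one transaction are assumed to share an owner, since spending normally requires the keys for every input. A minimal union-find sketch of that heuristic follows; the transaction data is a toy example. CoinJoin transactions violate the assumption and can merge unrelated users into one cluster, which is one more reason mixer activity needs separate handling.

```python
from typing import Dict, Iterable, List, Set


class UnionFind:
    def __init__(self) -> None:
        self.parent: Dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def cluster_wallets(tx_inputs: Iterable[List[str]]) -> List[Set[str]]:
    """Common-input-ownership: addresses co-spent in one transaction end up in one cluster."""
    uf = UnionFind()
    for inputs in tx_inputs:
        for addr in inputs:
            uf.find(addr)  # register single-input addresses as their own cluster
        for addr in inputs[1:]:
            uf.union(inputs[0], addr)
    clusters: Dict[str, Set[str]] = {}
    for addr in uf.parent:
        clusters.setdefault(uf.find(addr), set()).add(addr)
    # Largest clusters first; ties broken by the smallest address for stable output.
    return sorted(clusters.values(), key=lambda c: (-len(c), min(c)))
```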

When we enter the address into the explorer, the displayed data includes transaction records, each with details such as the date and the amount sent or received. Notably, one of the transactions received funds from an unfamiliar sender (labeled with an identifier beginning with “06f”), which allowed us to establish that these addresses share an owner and subsequently uncover the complete wallet.

With a transaction history dating back to 2019, we now have a time frame that matches our investigation. Let’s proceed to scrutinize the individual transactions associated with each of these Bitcoin addresses.

The two sites are related: their Bitcoin addresses belong to the same wallet, which strongly indicates that the same individual is behind both.

The transaction history shows how funds have moved in and out of each address, potentially revealing patterns or connections to other addresses.

Most of the transactions paid into these addresses look like normal transactions on the blockchain. On closer examination, however, some transactions involve many addresses, possibly indicating the use of a Bitcoin mixing service. This is common: many actors use a mixing service, or cryptocurrency tumbler, to improve their anonymity by essentially scrambling the addresses and the payments made.
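One common signature of a CoinJoin-style mix is a transaction with many inputs and several outputs of exactly the same value. The sketch below flags that pattern; the Tx type, the thresholds, and the sample transactions are hypothetical choices, and real tooling combines many more signals. It will not catch a centralized tumbler, which typically takes deposits and pays out from its own address pool rather than producing one distinctive transaction.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class Tx:
    txid: str
    input_addresses: List[str]
    output_values_sat: List[int]  # output amounts in satoshis


def looks_like_coinjoin(tx: Tx, min_inputs: int = 5, min_equal_outputs: int = 5) -> bool:
    """Flag transactions with many distinct inputs and many outputs of one identical value."""
    if len(set(tx.input_addresses)) < min_inputs or not tx.output_values_sat:
        return False
    _, most_common_count = Counter(tx.output_values_sat).most_common(1)[0]
    return most_common_count >= min_equal_outputs


if __name__ == "__main__":
    mix = Tx("t1", [f"in{i}" for i in range(6)], [100_000] * 6 + [12_345, 67_890])
    normal = Tx("t2", ["in0"], [50_000, 1_234])
    print(looks_like_coinjoin(mix), looks_like_coinjoin(normal))  # True False
```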

The address in question most likely belongs to an exchange or to a heavily used Bitcoin tumbling service, which would explain the large number of addresses in its wallet: a big address pool is what lets a tumbler scramble transactions.

At this point, we have established the relationship between the two sites and shown that they are indeed owned by the same person. We have also tracked down the market’s administrator. However, keep in mind that open-source information on the dark web and the blockchain can only take you so far.

So, we’ve pieced together information from different places to uncover connections between seemingly unrelated things, revealing shady activities. I hope you’ve learned something from this. Until next time.